
...

Rclone v1.58.1 or higher must be installed on the server where the Universal Agent is installed.

Rclone can be installed on Windows and Linux.

To install Rclone on Linux systems, run:

```bash
curl https://rclone.org/install.sh | sudo bash
```

Note: If the URL is not reachable from your server, you can also install Rclone on Linux from a pre-compiled binary.

To install Rclone on Linux system from a pre-compiled binary:

Fetch and unpack

```bash
curl -O https://downloads.rclone.org/rclone-current-linux-amd64.zip
unzip rclone-current-linux-amd64.zip
cd rclone-*-linux-amd64
```

Copy binary file

```bash
sudo cp rclone /usr/bin/
sudo chown root:root /usr/bin/rclone
sudo chmod 755 /usr/bin/rclone
```

Install manpage

```bash
sudo mkdir -p /usr/local/share/man/man1
sudo cp rclone.1 /usr/local/share/man/man1/
sudo mandb
```
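After either installation method, it can help to confirm that the installed binary meets the v1.58.1 minimum. The following is a minimal sketch, assuming GNU coreutils `sort -V` is available for version ordering; the function names are illustrative and not part of the Universal Task.

```bash
# Minimum Rclone version required by the Universal Agent integration.
MIN_RCLONE_VERSION="1.58.1"

# version_ge A B: exit status 0 if version A >= version B.
version_ge() {
  # sort -V orders versions naturally; if B sorts first (or A equals B), A >= B.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# parse_rclone_version: read `rclone version` output on stdin and print the
# bare version from its first line, e.g. "rclone v1.58.1" -> "1.58.1".
parse_rclone_version() {
  head -n 1 | sed -n -E 's/^rclone v([0-9][0-9.]*).*/\1/p'
}

# check_rclone: report whether the rclone on PATH is recent enough.
check_rclone() {
  if ! command -v rclone >/dev/null 2>&1; then
    echo "rclone not found on PATH" >&2
    return 1
  fi
  v="$(rclone version | parse_rclone_version)"
  if [ -n "$v" ] && version_ge "$v" "$MIN_RCLONE_VERSION"; then
    echo "OK: rclone $v"
  else
    echo "rclone ${v:-(unknown)} is older than $MIN_RCLONE_VERSION or could not be parsed" >&2
    return 1
  fi
}
```

Source the functions and run `check_rclone` on the Universal Agent server; a non-zero exit status means Rclone is missing or too old.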

To install Rclone on Windows systems:

Rclone is a Go program and comes as a single binary file.

Download the relevant binary from the Rclone downloads page (https://rclone.org/downloads/).

Extract the rclone.exe binary from the archive into a folder that is on the Windows PATH.

...

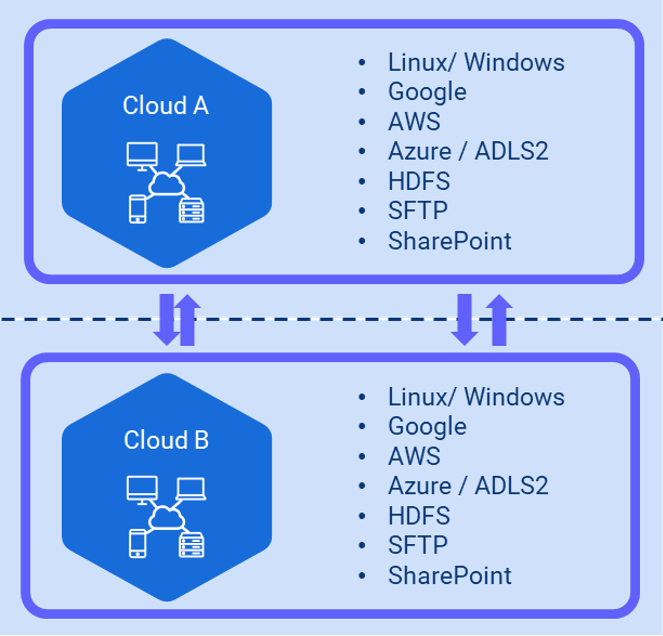

- Transfer data to, from, and between any cloud provider
- Transfer between any major storage applications, such as SharePoint or Dropbox
- Transfer data to and from a Hadoop Distributed File System (HDFS)
- Download a URL's content and copy it to the destination without saving it in temporary storage
- Data is streamed from one object store to another (no intermediate storage)
- Horizontally scalable: multiple file transfers can run in parallel, up to the number of cores in the machine
- Supports chunking: larger files can be divided into multiple chunks
- Always preserves timestamps and verifies checksums
- Supports encryption, caching, and compression
- Perform dry-runs
- Dynamic token updates for SharePoint connections
- Regular expression-based include/exclude filter rules

Supported actions are:

- List objects
- List directory
- Copy / Move
- Copy To / Move To
- Sync folders
- Remove object / object store
- Perform dry-runs
- Monitor object, including triggering of Tasks and Workflows
- Copy URL
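For orientation, most of the actions above have a close counterpart among the standard Rclone subcommands. The sketch below is a hypothetical mapping, not the Universal Task's documented implementation: the action labels "copy to", "move to", and "sync" are illustrative, and monitor-object is treated as having no single Rclone equivalent.

```bash
# Hypothetical mapping from task actions to Rclone subcommands with similar
# behavior. The Universal Task's real implementation may differ.
rclone_subcommand_for() {
  case "$1" in
    "list directory")      echo "lsd" ;;      # list directories / buckets
    "list objects")        echo "lsl" ;;      # list objects with size and modification time
    "copy")                echo "copy" ;;
    "copy to")             echo "copyto" ;;   # copy a single file to a new name
    "move")                echo "move" ;;
    "move to")             echo "moveto" ;;
    "sync")                echo "sync" ;;     # make the target identical to the source
    "remove-object")       echo "delete" ;;   # delete files, keep directories
    "remove-object-store") echo "rmdir" ;;    # remove an empty directory / bucket
    "create-object-store") echo "mkdir" ;;
    "copy-url")            echo "copyurl" ;;  # stream a URL's content to the destination
    *)                     return 1 ;;        # e.g. monitor-object: no single subcommand
  esac
}
```

For example, `rclone_subcommand_for copy-url` prints `copyurl`, the Rclone command that copies a URL's content to a destination.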

Import Inter-Cloud Data Transfer Universal Template

...

- This Universal Task requires the Resolvable Credentials feature. Check that the Resolvable Credentials Permitted system property has been set to true.

- Download the provided ZIP file (for example, from the Stonebranch Integration Hub).

- In the Universal Controller UI, select Configuration > Universal Templates to display the current list of Universal Templates.

- Click Import Template.

- Select the template ZIP file and click Import.

...

Configure the connection file.

Create a new Inter-Cloud Data Transfer Task.


...

```ini
# Script Name: connectionv3connectionv4.conf
#
# Description:
# Connection Script file for Inter-Cloud Data Transfer Task
#
#
# 09.03.2022 Version 1 Initial Version requires UA 7.2
# 09.03.2022 Version 2 SFTP support
# 27.04.2022 Version 3 Azure target mistake corrected
# 13.05.2022 Version 4 Copy_Url added

[amazon_s3_target]
type = s3
provider = AWS
env_auth = false
access_key_id = ${_credentialUser('${ops_var_target_credential}')}
secret_access_key = ${_credentialPwd('${ops_var_target_credential}')}
region = us-east-2
acl = bucket-owner-full-control

[amazon_s3_source]
type = s3
provider = AWS
env_auth = false
access_key_id = ${_credentialUser('${ops_var_source_credential}')}
secret_access_key = ${_credentialPwd('${ops_var_source_credential}')}
region = us-east-2
acl = bucket-owner-full-control
role_arn = arn:aws:iam::552436975963:role/SB-AWS-FULLX

[microsoft_azure_blob_storage_sas_source]
type = azureblob
sas_url = ${_credentialPwd('${ops_var_source_credential}')}

[microsoft_azure_blob_storage_sas_target]
type = azureblob
sas_url = ${_credentialPwd('${ops_var_target_credential}')}

[microsoft_azure_blob_storage_source]
type = azureblob
account = ${_credentialUser('${ops_var_source_credential}')}
key = ${_credentialPwd('${ops_var_source_credential}')}

[microsoft_azure_blob_storage_target]
type = azureblob
account = ${_credentialUser('${ops_var_target_credential}')}
key = ${_credentialPwd('${ops_var_target_credential}')}

[datalakegen2_storage_source]
type = azureblob
account = ${_credentialUser('${ops_var_source_credential}')}
key = ${_credentialPwd('${ops_var_source_credential}')}

[datalakegen2_storage_target]
type = azureblob
account = ${_credentialUser('${ops_var_target_credential}')}
key = ${_credentialPwd('${ops_var_target_credential}')}

[datalakegen2_storage_sp_source]
type = azureblob
account = ${_credentialUser('${ops_var_source_credential}')}
service_principal_file = ${_scriptPath('azure-principal.json')}
# service_principal_file = C:\virtual_machines\Setup\SoftwareKeys\Azure\azure-principal.json

[datalakegen2_storage_sp_target]
type = azureblob
account = ${_credentialUser('${ops_var_target_credential}')}
service_principal_file = ${_scriptPath('azure-principal.json')}
# service_principal_file = C:\virtual_machines\Setup\SoftwareKeys\Azure\azure-principal.json

[google_cloud_storage_source]
type = google cloud storage
service_account_file = ${_credentialPwd('${ops_var_source_credential}')}
object_acl = bucketOwnerFullControl
project_number = clagcs
location = europe-west3

[google_cloud_storage_target]
type = google cloud storage
service_account_file = ${_credentialPwd('${ops_var_target_credential}')}
object_acl = bucketOwnerFullControl
project_number = clagcs
location = europe-west3

[onedrive_source]
type = onedrive
token = ${_credentialToken('${ops_var_source_credential}')}
drive_id = ${_credentialUser('${ops_var_source_credential}')}
drive_type = business
update_credential = token

[onedrive_target]
type = onedrive
token = ${_credentialToken('${ops_var_target_credential}')}
drive_id = ${_credentialUser('${ops_var_target_credential}')}
drive_type = business
update_credential = token

[hdfs_source]
type = hdfs
namenode = 172.18.0.2:8020
username = maria_dev

[hdfs_target]
type = hdfs
namenode = 172.18.0.2:8020
username = maria_dev

[linux_source]
type = local

[linux_target]
type = local

[windows_source]
type = local

[windows_target]
type = local

[sftp_source]
type = sftp
host = 3.1019.1076.58
user = ubuntu
pass = ${_credentialToken('${ops_var_source_credential}')}

[sftp_target]
type = sftp
host = 3.1019.1076.58
user = ubuntu
pass = ${_credentialToken('${ops_var_target_credential}')}

[copy_url]
```
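The Source, Target, and Storage Type fields expect a storage Type name "as defined in the Connection File", while the sections in the file carry `_source` and `_target` suffixes. The sketch below lists the names a connection file offers, assuming (as the field examples such as amazon_s3 suggest) that the task takes the section name without that suffix. The function name is illustrative.

```bash
# list_storage_types FILE: print the storage Type names defined in a
# connection file. Assumption: the task field takes the section name minus
# its "_source"/"_target" suffix; sections without one (e.g. [copy_url])
# are printed unchanged.
list_storage_types() {
  # 1) keep only "[section]" header lines and strip the brackets,
  # 2) drop a trailing _source/_target suffix,
  # 3) de-duplicate.
  sed -n -E 's/^[[:space:]]*\[([^]]+)\][[:space:]]*$/\1/p' "$1" \
    | sed -E 's/_(source|target)$//' \
    | sort -u
}
```

Running `list_storage_types connection.conf` against the file above would print names such as `amazon_s3`, `hdfs`, and `onedrive`, which can be compared with the values entered in the task.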

Considerations

Rclone supports connections to almost any storage system on the market:

...

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent that executes the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, list objects, move, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Move objects from source to target. |
| Source | Enter a source storage Type name as defined in the Connection File; for example, amazon_s3, microsoft_azure_blob_storage, hdfs, onedrive, linux. For a list of all possible storage types, refer to Overview of Cloud Storage Systems. |
| Target | Enter a target storage Type name as defined in the Connection File; for example, amazon_s3, microsoft_azure_blob_storage, hdfs, onedrive, linux. For a list of all possible storage types, refer to Overview of Cloud Storage Systems. |
| Connection File | In the connection file, you configure all required parameters and credentials to connect to the source and target cloud storage systems. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection parameters for both AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the Connection File. |
| Filter Type | [ include, exclude, none ] Define the type of filter to apply. |
| Filter | Provide the patterns for file matching; for example, in a copy action: Filter Type: include. For more examples of filter matching patterns, refer to Rclone Filtering. |
| Other Parameters | This field can be used to apply additional flags to the selected action. For a list of all possible flags, refer to Global Flags. Recommendation: Add the flag `--max-depth 1` to all copy, move, remove-object, and remove-object-store actions in the Other Parameters field to avoid a recursive action. Attention: If `--max-depth 1` is not set, the action is performed recursively. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller REST API credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc |
| Loglevel | Universal Task logging settings [ DEBUG, INFO, WARNING, ERROR, CRITICAL ]. |
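To see how the fields in this table relate to an Rclone command line, the sketch below prints a command assembled from Action, Source, Target, Filter Type, Filter, Other Parameters, and Dry-run. It is a hypothetical illustration, not the command the Universal Task actually runs: the `CONN_FILE` variable, the default file name `connection.conf`, and the `<section>:<path>` remote strings are assumptions. `--config`, `--include`, `--exclude`, `--max-depth`, and `--dry-run` are standard Rclone flags.

```bash
# Hypothetical sketch: print an rclone command built from the task fields.
# CONN_FILE and the remote strings (e.g. "amazon_s3_source:bucket") are
# illustrative assumptions.
build_transfer_cmd() {
  local action="$1" src="$2" dst="$3"
  local filter_type="$4" filter="$5" dry_run="$6" other="$7"
  local cmd="rclone $action --config ${CONN_FILE:-connection.conf} $src $dst"
  case "$filter_type" in
    include) cmd="$cmd --include '$filter'" ;;
    exclude) cmd="$cmd --exclude '$filter'" ;;
    *) ;;                                        # none: no filter flag
  esac
  [ -n "$other" ] && cmd="$cmd $other"           # Other Parameters, e.g. "--max-depth 1"
  [ "$dry_run" = "checked" ] && cmd="$cmd --dry-run"
  printf '%s\n' "$cmd"
}
```

For example, a move from an S3 bucket to Azure with an include filter, the recommended `--max-depth 1`, and Dry-run checked prints a command ending in `--include 'report*.txt' --max-depth 1 --dry-run`, which you could run manually to preview the transfer.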

...

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent that executes the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, list objects, move, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Remove objects in an OS directory or cloud object store. |
| Storage Type | Enter a storage Type name as defined in the Connection File; for example, amazon_s3, microsoft_azure_blob_storage, hdfs, onedrive, linux. For a list of all possible storage types, refer to Overview of Cloud Storage Systems. |
| File Path | Path to the directory from which you want to remove objects. For example, with File Path: stonebranchpmtest and Filter: report[1-3].txt, the task removes all S3 objects matching report[1-3].txt (report1.txt, report2.txt, and report3.txt) from the S3 bucket stonebranchpmtest. |
| Connection File | In the connection file, you configure all required parameters and credentials to connect to the source and target cloud storage systems. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection parameters for both AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the Connection File. |
| Other Parameters | This field can be used to apply additional flags to the selected action. For a list of all possible flags, refer to Global Flags. Recommendation: Add the flag `--max-depth 1` to all copy, move, remove-object, and remove-object-store actions in the Other Parameters field to avoid a recursive action. Attention: If `--max-depth 1` is not set, the action is performed recursively. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller REST API credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc |
| Loglevel | Universal Task logging settings [ DEBUG, INFO, WARNING, ERROR, CRITICAL ]. |
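Because remove-object deletes whatever the filter matches, it is worth previewing which names a pattern such as report[1-3].txt selects. The sketch below does this locally, assuming that for plain file names (no `/`) Rclone's glob syntax behaves like the shell's, where `[1-3]` is a character class. For anything more complex, checking the real listing with `rclone lsf` and the same `--include` pattern, or enabling Dry-run, is safer. The function names are illustrative.

```bash
# matches_filter PATTERN NAME: succeed if NAME matches the glob PATTERN.
matches_filter() {
  # The unquoted $1 in the case pattern is interpreted as a glob.
  case "$2" in
    $1) return 0 ;;
    *)  return 1 ;;
  esac
}

# preview_filter PATTERN NAME...: print the names the pattern would select.
preview_filter() {
  local pattern="$1" name
  shift
  for name in "$@"; do
    if matches_filter "$pattern" "$name"; then
      printf '%s\n' "$name"
    fi
  done
}
```

For example, `preview_filter 'report[1-3].txt' report1.txt report4.txt report3.txt` prints only report1.txt and report3.txt.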

...

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent that executes the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, list objects, move, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Remove an OS directory or cloud object store. |
| Storage Type | Enter a storage Type name as defined in the Connection File; for example, amazon_s3, microsoft_azure_blob_storage, hdfs, onedrive, linux. For a list of all possible storage types, refer to Overview of Cloud Storage Systems. |
| Directory | Name of the directory you want to remove. The directory can be an object store or an OS file system directory, and it must be empty before it can be removed. For example, Directory: stonebranchpmtest removes the bucket stonebranchpmtest. |
| Connection File | In the connection file, you configure all required parameters and credentials to connect to the source and target cloud storage systems. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection parameters for both AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the Connection File. |
| Other Parameters | This field can be used to apply additional flags to the selected action. For a list of all possible flags, refer to Global Flags. Recommendation: Add the flag `--max-depth 1` to all copy, move, remove-object, and remove-object-store actions in the Other Parameters field to avoid a recursive action. Attention: If `--max-depth 1` is not set, the action is performed recursively. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller REST API credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc |
| Loglevel | Universal Task logging settings [ DEBUG, INFO, WARNING, ERROR, CRITICAL ]. |
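Since remove-object-store only succeeds on an empty directory, a pre-check can avoid a failed task run when the storage type is a local OS directory (for example, the linux type). The following is a minimal sketch for local directories only; it does not inspect cloud buckets, and the function name is illustrative.

```bash
# is_empty_dir DIR: exit 0 if DIR is an empty directory, 1 if it has
# entries, 2 if it is not a directory at all.
is_empty_dir() {
  [ -d "$1" ] || return 2
  # ls -A lists every entry except "." and "..", including hidden files.
  [ -z "$(ls -A "$1")" ]
}
```

A wrapper script could call `is_empty_dir /path/to/dir` before launching the task and skip the removal, or empty the directory first, when it returns 1.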

...