...

...

...

...

...

...



Disclaimer

Your use of this download is governed by Stonebranch’s Terms of Use, which are available at https://www.stonebranch.com/integration-hub/Terms-and-Privacy/Terms-of-Use/

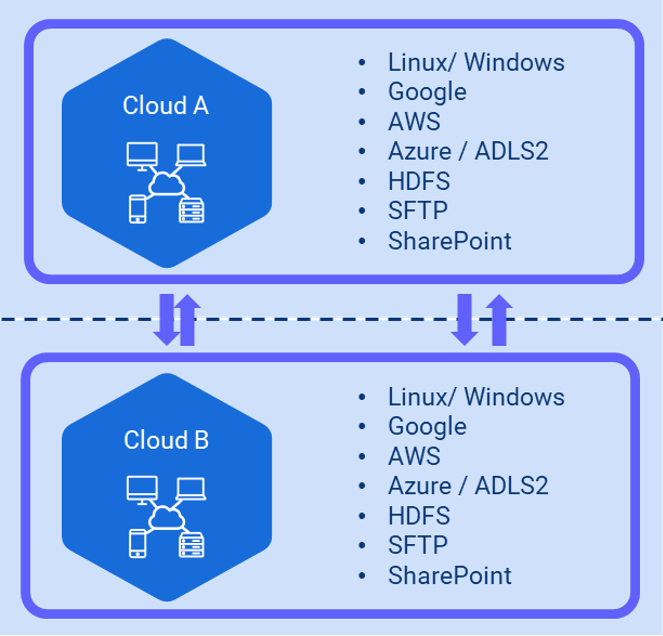

Overview

The Inter-Cloud Data Transfer integration allows you to transfer data to, from, and between any of the major private and public cloud providers like AWS, Google Cloud, and Microsoft Azure.

...

- AWS S3

- Google Cloud

- SharePoint

- Dropbox

- OneDrive

- Hadoop Distributed File System (HDFS)

Software Requirements

Software Requirements for Universal Agent

Universal Agent for Linux or Windows Version 7.2.0.0 or later is required.

Universal Agent must be installed with the Python option (--python yes).

Software Requirements for Universal Controller

Universal Controller 7.2.0.0 or later.

Software Requirements for the Application to be Scheduled

Rclone v1.58.1 or later must be installed on the server where the Universal Agent is installed.

Rclone can be installed on Windows and Linux.

To install Rclone on Linux systems, run:

```bash
curl https://rclone.org/install.sh | sudo bash
```

Note: If the URL is not reachable from your server, Rclone can also be installed on Linux from a pre-compiled binary.

To install Rclone on a Linux system from a pre-compiled binary:

Fetch and unpack

```bash
curl -O https://downloads.rclone.org/rclone-current-linux-amd64.zip
unzip rclone-current-linux-amd64.zip
cd rclone-*-linux-amd64
```

Copy binary file

```bash
sudo cp rclone /usr/bin/
sudo chown root:root /usr/bin/rclone
sudo chmod 755 /usr/bin/rclone
```

Install manpage

```bash
sudo mkdir -p /usr/local/share/man/man1
sudo cp rclone.1 /usr/local/share/man/man1/
sudo mandb
```

To install Rclone on Windows systems:

Rclone is a Go program and comes as a single binary file.

Download the relevant binary here.

Extract the rclone or rclone.exe binary from the archive into a folder that is in the Windows PATH.
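After installation, it can be useful to confirm that the installed Rclone meets the minimum version stated above. The following is a minimal sketch, not part of the integration: it assumes that `rclone version` prints a first line of the form `rclone v1.58.1`, and the function names are hypothetical.

```python
import re
import shutil
import subprocess

MINIMUM_VERSION = (1, 58, 1)  # minimum Rclone version stated in the requirements


def parse_rclone_version(version_output):
    """Extract (major, minor, patch) from the text printed by `rclone version`."""
    match = re.search(r"rclone v(\d+)\.(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError("could not find an Rclone version string")
    return tuple(int(part) for part in match.groups())


def meets_minimum(version_output, minimum=MINIMUM_VERSION):
    """Return True if the reported version is at least the required minimum."""
    return parse_rclone_version(version_output) >= minimum


def check_installed_rclone():
    """Return True if rclone is found on the PATH and is recent enough."""
    executable = shutil.which("rclone")
    if executable is None:
        return False
    result = subprocess.run(
        [executable, "version"], capture_output=True, text=True, check=True
    )
    return meets_minimum(result.stdout)
```

Calling `check_installed_rclone()` on the Agent server returns `False` both when Rclone is missing from the PATH and when it is older than v1.58.1.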

Key Features

Some details about the Inter-Cloud Data Transfer Task:

- Transfer data to, from, and between any cloud provider
- Transfer between any major storage applications like SharePoint or Dropbox
- Transfer data to and from a Hadoop File System (HDFS)
- Download a URL's content and copy it to the destination without saving it in temporary storage
- Data is streamed from one object store to another (no intermediate storage)
- Horizontally scalable by allowing multiple parallel file transfers, up to the number of cores in the machine
- Supports chunking: larger files can be divided into multiple chunks
- Always preserves timestamps and verifies checksums
- Supports encryption, caching, and compression
- Perform dry-runs
- Dynamic token updates for SharePoint connections
- Regular-expression-based include/exclude filter rules

Supported actions are:

- List objects
- List directory
- Copy / Move
- Copy To / Move To
- Sync Folders
- Remove object / object store
- Perform Dry-runs
- Monitor object, including triggering of Tasks and Workflows
- Copy URL

Import Inter-Cloud Data Transfer Universal Template

To use this downloadable Universal Template, you first must perform the following steps:

- This Universal Task requires the Resolvable Credentials feature. Check that the Resolvable Credentials Permitted system property has been set to true.

- Download the provided ZIP file (for example, from the Stonebranch Integration hub).

- In the Universal Controller UI, select Configuration > Universal Templates to display the current list of Universal Templates.

- Click Import Template.

- Select the template ZIP file and click Import.

...

- To import the Universal Template into your Controller, follow the instructions here.

- When the files have been imported successfully, refresh the Universal Templates list; the Universal Template will appear on the list.

...

Configure Inter-Cloud Data Transfer Universal Tasks

Configuring a new Inter-Cloud Data Transfer requires two steps:

Configure the connection file.

Create a new Inter-Cloud Data Transfer Task.


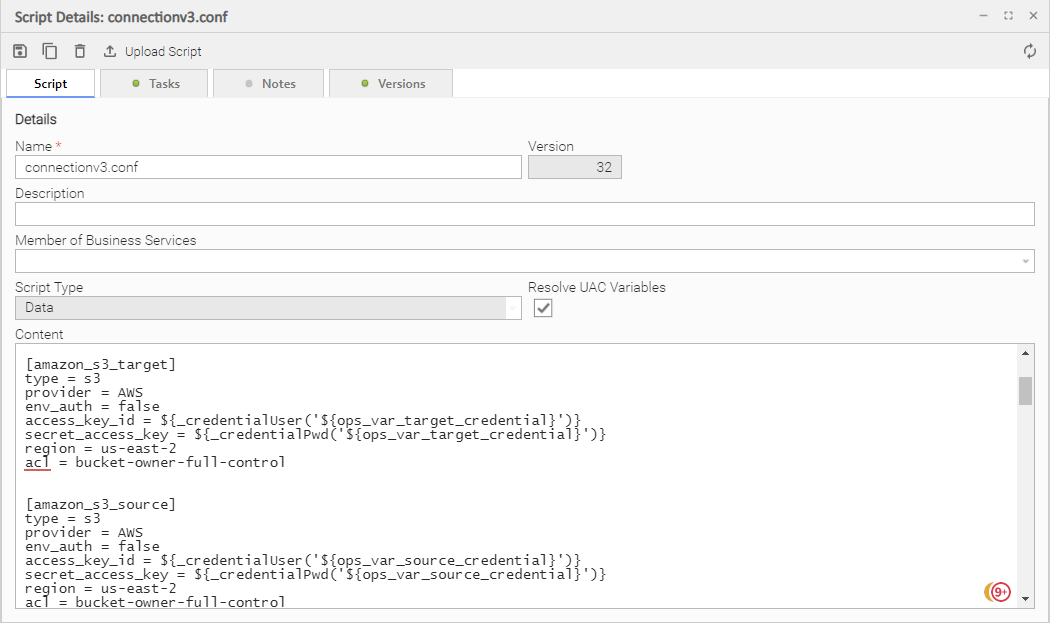

The connection file contains all required Parameters and Credentials to connect to the Source and Target Cloud Storage System.

...

The following connection file must be saved in the Universal Controller script library. This file is later referenced in the different Inter-Cloud Data Transfer tasks.

connections.conf (v4)

```ini
# Script Name: connectionv4.conf
#
# Description:
# Connection Script file for Inter-Cloud Data Transfer Task
#
#
# 09.03.2022 Version 1 Initial Version requires UA 7.2
# 09.03.2022 Version 2 SFTP support
# 27.04.2022 Version 3 Azure target mistake corrected
# 13.05.2022 Version 4 Copy_Url added
#

[amazon_s3_target]
type = s3
provider = AWS
env_auth = false
access_key_id = ${_credentialUser('${ops_var_target_credential}')}
secret_access_key = ${_credentialPwd('${ops_var_target_credential}')}
region = us-east-2
acl = bucket-owner-full-control

[amazon_s3_source]
type = s3
provider = AWS
env_auth = false
access_key_id = ${_credentialUser('${ops_var_source_credential}')}
secret_access_key = ${_credentialPwd('${ops_var_source_credential}')}
region = us-east-2
acl = bucket-owner-full-control
role_arn = arn:aws:iam::552436975963:role/SB-AWS-FULLX

[microsoft_azure_blob_storage_sas_source]
type = azureblob
sas_url = ${_credentialPwd('${ops_var_source_credential}')}

[microsoft_azure_blob_storage_sas_target]
type = azureblob
sas_url = ${_credentialPwd('${ops_var_target_credential}')}

[microsoft_azure_blob_storage_source]
type = azureblob
account = ${_credentialUser('${ops_var_source_credential}')}
key = ${_credentialPwd('${ops_var_source_credential}')}

[microsoft_azure_blob_storage_target]
type = azureblob
account = ${_credentialUser('${ops_var_target_credential}')}
key = ${_credentialPwd('${ops_var_target_credential}')}

[datalakegen2_storage_source]
type = azureblob
account = ${_credentialUser('${ops_var_source_credential}')}
key = ${_credentialPwd('${ops_var_source_credential}')}

[datalakegen2_storage_target]
type = azureblob
account = ${_credentialUser('${ops_var_target_credential}')}
key = ${_credentialPwd('${ops_var_target_credential}')}

[datalakegen2_storage_sp_source]
type = azureblob
account = ${_credentialUser('${ops_var_source_credential}')}
service_principal_file = ${_scriptPath('azure-principal.json')}
# service_principal_file = C:\virtual_machines\Setup\SoftwareKeys\Azure\azure-principal.json

[datalakegen2_storage_sp_target]
type = azureblob
account = ${_credentialUser('${ops_var_target_credential}')}
service_principal_file = ${_scriptPath('azure-principal.json')}
# service_principal_file = C:\virtual_machines\Setup\SoftwareKeys\Azure\azure-principal.json

[google_cloud_storage_source]
type = google cloud storage
service_account_file = ${_credentialPwd('${ops_var_source_credential}')}
object_acl = bucketOwnerFullControl
project_number = clagcs
location = europe-west3

[google_cloud_storage_target]
type = google cloud storage
service_account_file = ${_credentialPwd('${ops_var_target_credential}')}
object_acl = bucketOwnerFullControl
project_number = clagcs
location = europe-west3

[onedrive_source]
type = onedrive
token = ${_credentialToken('${ops_var_source_credential}')}
drive_id = ${_credentialUser('${ops_var_source_credential}')}
drive_type = business
update_credential = token

[onedrive_target]
type = onedrive
token = ${_credentialToken('${ops_var_target_credential}')}
drive_id = ${_credentialUser('${ops_var_target_credential}')}
drive_type = business
update_credential = token

[hdfs_source]
type = hdfs
namenode = 172.18.0.2:8020
username = maria_dev

[hdfs_target]
type = hdfs
namenode = 172.18.0.2:8020
username = maria_dev

[linux_source]
type = local

[linux_target]
type = local

[windows_source]
type = local

[windows_target]
type = local

[sftp_source]
type = sftp
host = 3.19.76.58
user = ubuntu
pass = ${_credentialToken('${ops_var_source_credential}')}

[sftp_target]
type = sftp
host = 3.19.76.58
user = ubuntu
pass = ${_credentialToken('${ops_var_target_credential}')}

[copy_url]
```

connection.conf in the Universal Controller script library
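Because the connection file uses an INI-style layout with one section per remote, its contents can be inspected with a standard parser before it is uploaded to the script library. The sketch below is only an illustration under that assumption: the `list_remotes` helper is hypothetical, and the sample text is an abbreviated excerpt of the file shown above.

```python
import configparser

# Abbreviated excerpt of the connection file, used here as sample input.
SAMPLE = """
[amazon_s3_source]
type = s3
provider = AWS
access_key_id = ${_credentialUser('${ops_var_source_credential}')}

[microsoft_azure_blob_storage_target]
type = azureblob
account = ${_credentialUser('${ops_var_target_credential}')}

[copy_url]
"""


def list_remotes(config_text):
    """Map each section (remote) name to its storage type, or None if no type is set."""
    # interpolation=None leaves the ${...} Controller functions as literal text.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    return {name: parser[name].get("type") for name in parser.sections()}


remotes = list_remotes(SAMPLE)
for name, storage_type in remotes.items():
    print(f"{name}: {storage_type}")
```

A check like this makes it easy to spot a section that is missing its `type` entry, such as the empty `[copy_url]` section.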

Considerations

Rclone supports connections to almost any storage system on the market:

...

Note: If you want to connect to a different system (for example, Dropbox), contact Stonebranch for support.

Create an Inter-Cloud Data Transfer Task

For Universal Task Inter-Cloud Data Transfer, create a new task and enter the task-specific Details that were created in the Universal Template.

The following Actions are supported:

| Action | Description |
|---|---|
| list directory | List directories. |
| copy | Copy objects from source to target. |
| copyto | Copy a single object from source to target and optionally rename the object on the target. |
| move | Move objects from source to target. |
| moveto | Move a single object from source to target and optionally rename the object on the target. |
| sync | Sync the source to the destination, changing the destination only. |
| list objects | List objects in an OS directory or cloud object store. |
| remove-object | Remove objects in an OS directory or cloud object store. |
| remove-object-store | Remove an OS directory or cloud object store. |
| create-object-store | Create an OS directory or cloud object store. |
| copy-url | Download a URL's content and copy it to the destination without saving it in temporary storage. |
| monitor-object | Monitor a file or object in an OS directory or cloud object store and, optionally, launch Task(s) when an object is identified by the monitor. |

...

The following sections describe the fields of each task action and provide an example.

Important Considerations

Before running a move, moveto, copy, copyto or sync command, you can always try the command by setting the Dry-run option in the Task.

The field max-depth (recursion depth) limits the recursion depth. max-depth 1 means that only the current directory is in scope. This is the default value.

Attention: If you change max-depth to a value greater than 1, a recursive action is performed. This should be considered in any copy, move, sync, remove-object, and remove-object-store operations.
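The effect of max-depth can be illustrated on a local directory tree. The following sketch does not call Rclone; it only mimics the scoping rule described above (level 1 is the current directory, level 2 adds one level of subfolders). The function names and the demo file names are hypothetical.

```python
import os
import tempfile


def list_files(root, max_depth=1):
    """Return file paths relative to root, visiting at most max_depth directory levels."""
    found = []
    for current, dirs, files in os.walk(root):
        relative = os.path.relpath(current, root)
        # The starting directory counts as level 1, its subdirectories as level 2, ...
        level = 1 if relative == "." else relative.count(os.sep) + 2
        found.extend(os.path.normpath(os.path.join(relative, name)) for name in files)
        if level >= max_depth:
            dirs[:] = []  # do not descend any further
    return sorted(found)


def build_demo_tree():
    """Create a throwaway tree: a.txt, sub/b.txt, sub/deeper/c.txt."""
    root = tempfile.mkdtemp()
    os.makedirs(os.path.join(root, "sub", "deeper"))
    for parts in (("a.txt",), ("sub", "b.txt"), ("sub", "deeper", "c.txt")):
        with open(os.path.join(root, *parts), "w") as handle:
            handle.write("demo")
    return root
```

With the demo tree, `list_files(root, 1)` returns only `a.txt`, while `list_files(root, 2)` also returns the file in `sub`. The same widening of scope applies to destructive actions, which is why the default of 1 is the safer choice.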

Inter-Cloud Data Transfer Actions

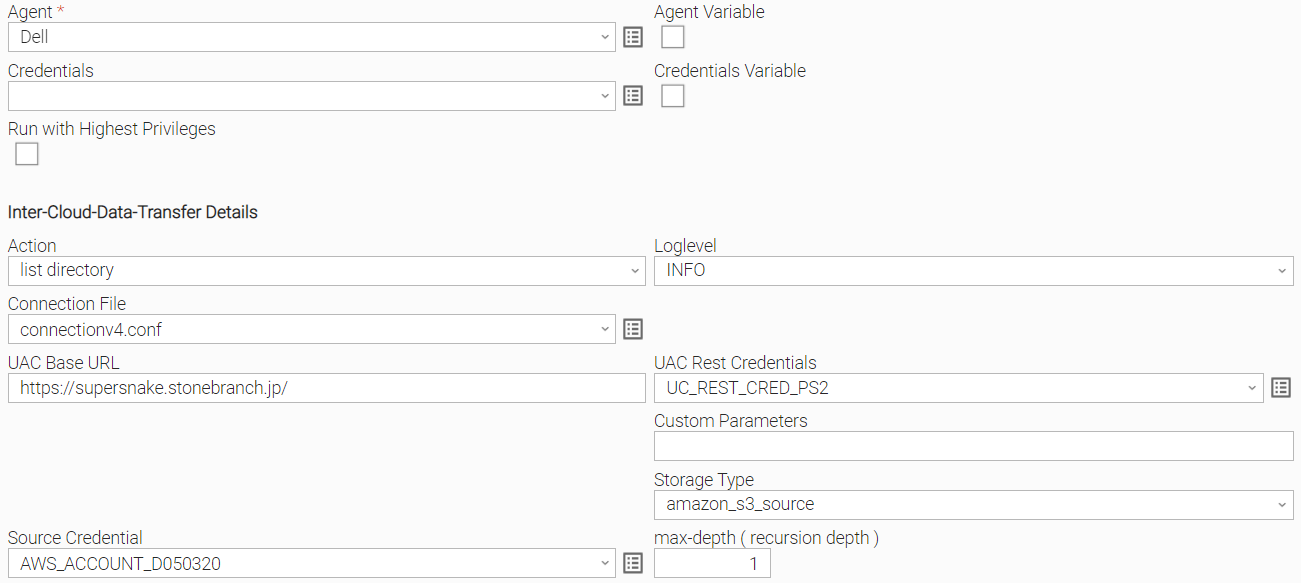

Action: list directory

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] List directories. |
| Storage Type | Select the storage type. |
| Source Credential | Credential used for the selected Storage Type. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. Recommendation: Add the flag max-depth 1 to avoid a recursive action. |
| max-depth (recursion depth) | Limits the recursion depth. |

Example

The following example lists all AWS S3 buckets in the AWS account configured in the connection.conf file.

No sub-directories are displayed, because max-depth is set to 1.

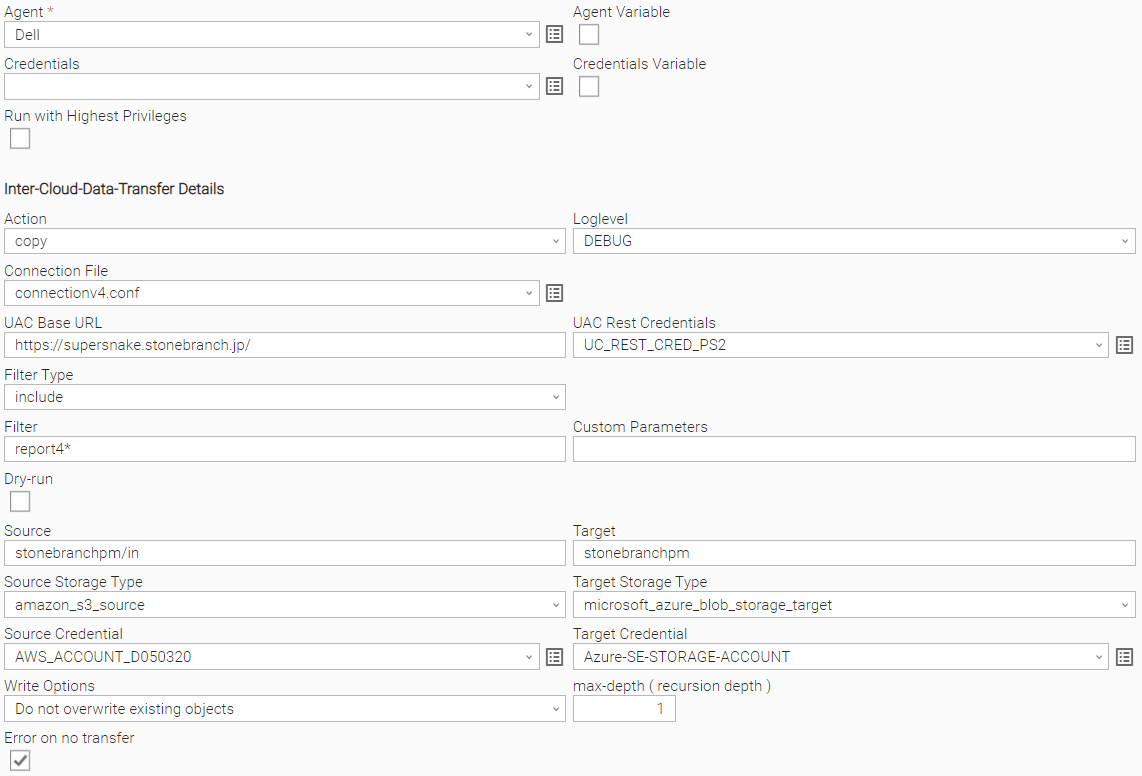

Action: copy

Before running a move, moveto, copy, copyto or sync command, you can always try the command by setting the Dry-run option in the Task.

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Copy objects from source to target. |
| Write Options | [ Do not overwrite existing objects, Replace existing objects, Create new with timestamp if exists, Use Custom Parameters ] Do not overwrite existing objects: Objects on the target with the same name will not be overwritten. Replace existing objects: Objects are always overwritten, even if the same object already exists on the target. Create new with timestamp if exists: If an object with the same name exists on the target, the object is duplicated with a timestamp added to the name. The file extension is kept; for example, if report1.txt exists on the target, a new file with a timestamp as postfix is created on the target, such as report1_20220513_093057.txt. Use Custom Parameters: Only the parameters in the field “Custom Parameters” are applied (other Parameters are ignored). |
| error-on-no-transfer | The error-on-no-transfer flag lets the task fail if no transfer was done. |
| Source Storage Type | Select the source storage type. |
| Target Storage Type | Select the target storage type. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| Filter Type | [ include, exclude, none ] Define the type of filter to apply. |
| Filter | Provide the patterns for file matching. For more examples of filter matching patterns, refer to Rclone Filtering. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |
| max-depth (recursion depth) | Limits the recursion depth. Attention: If you change max-depth to a value greater than 1, a recursive action is performed. |

Example

The following example copies all files starting with report4 from the Amazon S3 bucket stonebranchpm, folder in, to the Azure container stonebranchpm.

No recursive copy will be done, because the flag --max-depth 1 is set.
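To make the relationship between the task fields and Rclone more concrete, the sketch below assembles an Rclone copy command line for a scenario like the one in this example. How the Universal Task actually maps its fields to Rclone arguments is not documented here, so the mapping, the `build_copy_command` helper, and the paths are assumptions; the remote names come from the connection file, and the flags used (`--config`, `--max-depth`, `--include`, `--dry-run`, `--error-on-no-transfer`) are standard Rclone flags.

```python
def build_copy_command(source, target, config_path, include=None,
                       max_depth=1, dry_run=False, error_on_no_transfer=False):
    """Return an argument list for `rclone copy` (illustrative mapping only)."""
    command = [
        "rclone", "copy", source, target,
        "--config", config_path,
        "--max-depth", str(max_depth),
    ]
    if include:
        command += ["--include", include]  # Filter Type: include
    if dry_run:
        command.append("--dry-run")  # trial run with no permanent changes
    if error_on_no_transfer:
        command.append("--error-on-no-transfer")
    return command


command = build_copy_command(
    source="amazon_s3_source:stonebranchpm/in",              # assumed source path
    target="microsoft_azure_blob_storage_target:stonebranchpm",
    config_path="connection.conf",                           # assumed file location
    include="report4*",
    dry_run=True,
)
print(" ".join(command))
```

Building the command as a list rather than a single string avoids shell quoting problems when a filter pattern contains characters such as `*` or `[`.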

Action: copyto

The Action copyto copies a single object from source to target and allows you to rename the object on the target.

Before running a move, moveto, copy, copyto, or sync command, you can always try the command by setting the Dry-run option in the Task.

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] copyto copies a single object from source to target and optionally renames the object on the target. |
| Write Options | [ Do not overwrite existing objects, Replace existing objects, Create new with timestamp if exists, Use Custom Parameters ] Do not overwrite existing objects: Objects on the target with the same name will not be overwritten. Replace existing objects: Objects are always overwritten, even if the same object already exists on the target. Create new with timestamp if exists: If an object with the same name exists on the target, the object is duplicated with a timestamp added to the name. The file extension is kept; for example, if report1.txt exists on the target, a new file with a timestamp as postfix is created on the target, such as report1_20220513_093057.txt. Use Custom Parameters: Only the parameters in the field “Custom Parameters” are applied (other Parameters are ignored). |
| error-on-no-transfer | The error-on-no-transfer flag lets the task fail if no transfer was done. |
| Source Storage Type | Select the source storage type. |
| Target Storage Type | Select the target storage type. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of possible flags, refer to https://rclone.org/flags/. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |

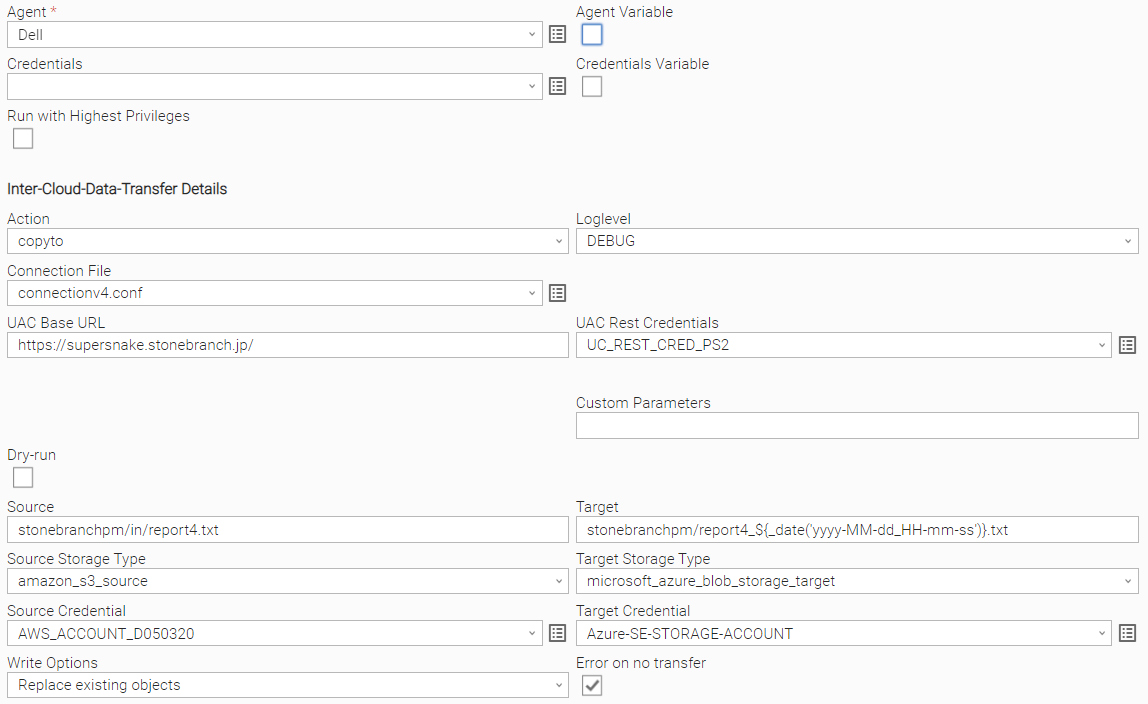

Example

The following example copies the file report4.txt from the Amazon S3 bucket stonebranchpm, folder in, to the Azure container stonebranchpm.

The file will be renamed at the target to stonebranchpm/report4_${_date('yyyy-MM-dd_HH-mm-ss')}.txt; for example, report4_2022-05-16_08-00-29.txt.
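The two timestamp naming schemes mentioned for this action can be reproduced in a few lines. This is only a sketch: in the task, the `${_date(...)}` function is resolved by the Universal Controller, and the `strftime` patterns below are an assumed Python translation of the formats shown in the examples. The `with_timestamp` helper is hypothetical.

```python
from datetime import datetime
from pathlib import PurePosixPath


def with_timestamp(object_name, moment, pattern="%Y%m%d_%H%M%S"):
    """Insert a formatted timestamp between the file stem and its extension."""
    path = PurePosixPath(object_name)
    stamped = f"{path.stem}_{moment.strftime(pattern)}{path.suffix}"
    return str(path.with_name(stamped))


# Write option "Create new with timestamp if exists"
print(with_timestamp("report1.txt", datetime(2022, 5, 13, 9, 30, 57)))

# copyto rename, equivalent to the pattern yyyy-MM-dd_HH-mm-ss
print(with_timestamp(
    "stonebranchpm/report4.txt",
    datetime(2022, 5, 16, 8, 0, 29),
    pattern="%Y-%m-%d_%H-%M-%S",
))
```

In both cases the extension is preserved, so tools that select files by suffix continue to work on the renamed objects.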

Action: list objects

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] List objects in an OS directory or cloud object store. |
| Storage Type | Select the storage type. |
| Source Credential | Credential used for the selected Storage Type. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| Filter Type | [ include, exclude, none ] Define the type of filter to apply. |
| Filter | Provide the patterns for file matching. For more examples of filter matching patterns, refer to Rclone Filtering. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. |
| Directory | Name of the directory you want to list the files in. For example, Directory: stonebranchpm/out lists all objects in the folder out of the bucket stonebranchpm. |
| List Format | [ list size and path, list modification time, size and path, list objects and directories, list objects and directories (Json) ] The choice box specifies how the output should be formatted. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |
| max-depth (recursion depth) | Limits the recursion depth. |

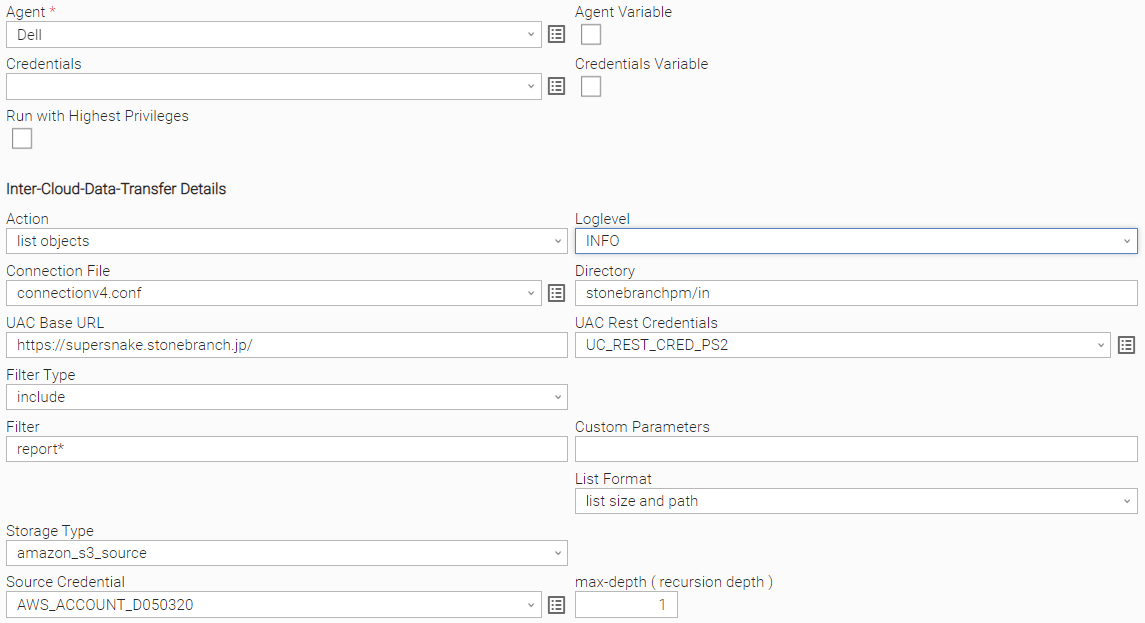

Example

The following example lists all objects starting with report in the S3 bucket stonebranchpm.
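When the JSON list format is selected, the output can be processed further by a script. The sketch below assumes that this format corresponds to the output of Rclone's `lsjson` command, which emits an array of entries with fields such as `Name`, `Size`, and `IsDir`; the sample data is made up for illustration and the `summarize` helper is hypothetical.

```python
import json

# Made-up sample in the shape produced by `rclone lsjson`.
SAMPLE_OUTPUT = """[
{"Path":"report1.txt","Name":"report1.txt","Size":120,"MimeType":"text/plain","ModTime":"2022-05-13T09:30:57Z","IsDir":false},
{"Path":"out","Name":"out","Size":-1,"MimeType":"inode/directory","ModTime":"2022-05-13T09:00:00Z","IsDir":true},
{"Path":"report2.txt","Name":"report2.txt","Size":80,"MimeType":"text/plain","ModTime":"2022-05-13T09:31:10Z","IsDir":false},
{"Path":"notes.md","Name":"notes.md","Size":15,"MimeType":"text/markdown","ModTime":"2022-05-13T09:32:00Z","IsDir":false}
]"""


def summarize(listing_json, prefix=""):
    """Return the names and total size of all files whose name starts with prefix."""
    entries = json.loads(listing_json)
    files = [
        entry for entry in entries
        if not entry["IsDir"] and entry["Name"].startswith(prefix)
    ]
    return {
        "names": sorted(entry["Name"] for entry in files),
        "total_bytes": sum(entry["Size"] for entry in files),
    }


print(summarize(SAMPLE_OUTPUT, prefix="report"))
```

Directories are skipped explicitly because they carry a size of -1 in this sample, which would otherwise distort the total.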

Action: move

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Move objects from source to target. |
| error-on-no-transfer | The error-on-no-transfer flag lets the task fail if no transfer was done. |
| Source Storage Type | Select the source storage type. |
| Target Storage Type | Select the target storage type. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| Filter Type | [ include, exclude, none ] Define the type of filter to apply. |
| Filter | Provide the patterns for file matching. For more examples of filter matching patterns, refer to Rclone Filtering. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |
| max-depth (recursion depth) | Limits the recursion depth. Attention: If you change max-depth to a value greater than 1, a recursive action is performed. |

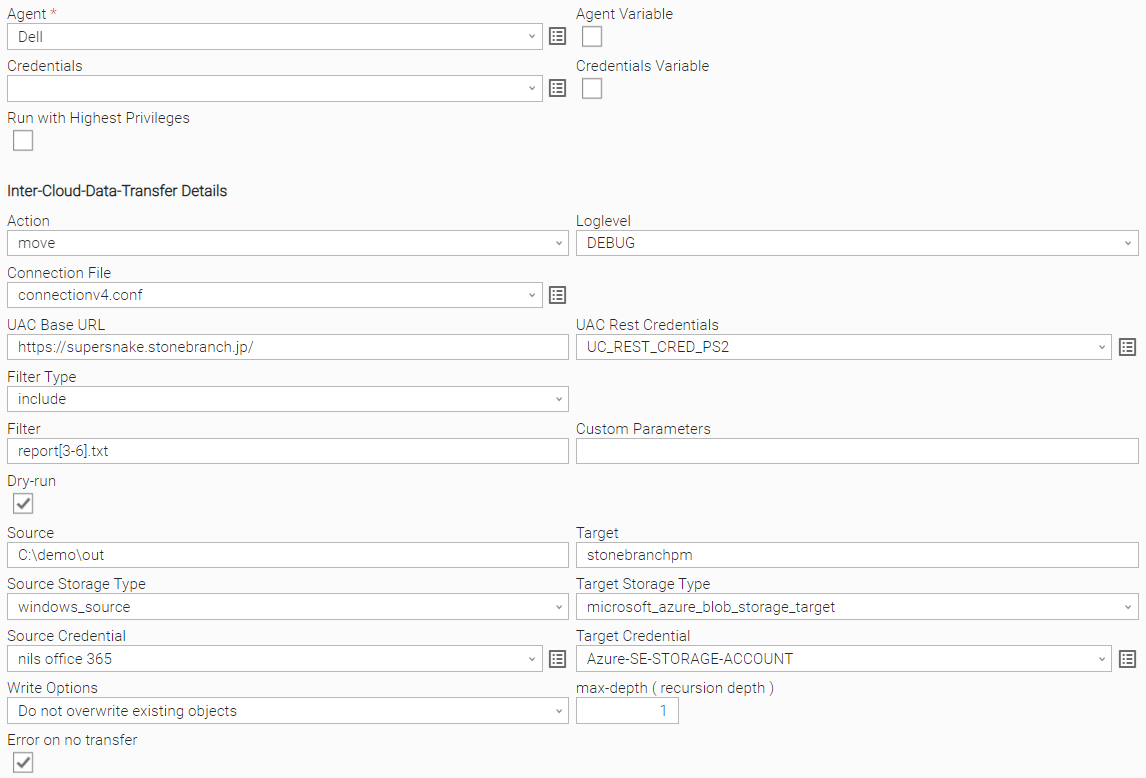

Example

The following example moves the objects matching report[3-6].txt from the Windows source directory C:\demo\out to the target Azure container stonebranchpm.

...

No recursive move will be done, because max-depth is set to 1.
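The include and exclude filter types can be previewed locally before a move is run. The sketch below uses Python's `fnmatch` module as a stand-in for Rclone's own pattern matching; the two agree on simple character classes such as `[3-6]`, but Rclone's filter syntax has additional features (for example `**` and `{a,b}` alternatives) that `fnmatch` does not implement. The `select` helper is hypothetical.

```python
from fnmatch import fnmatchcase


def select(names, pattern, filter_type="include"):
    """Return the names that remain in scope for the given filter type."""
    if filter_type == "none":
        return list(names)
    matched = [name for name in names if fnmatchcase(name, pattern)]
    if filter_type == "include":
        return matched
    if filter_type == "exclude":
        return [name for name in names if name not in matched]
    raise ValueError(f"unknown filter type: {filter_type}")


names = [f"report{number}.txt" for number in range(1, 8)]
print(select(names, "report[3-6].txt", "include"))
print(select(names, "report[3-6].txt", "exclude"))
```

Combining a preview like this with the Dry-run option gives two independent checks before objects are removed from the source.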

Action: moveto

The Action moveto moves a single object from source to target and allows you to rename the object on the target.

Before running a move, moveto, copy, copyto, or sync command, you can always try the command by setting the Dry-run option in the Task.

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] moveto moves a single object from source to target and optionally renames the object on the target. |
| error-on-no-transfer | The error-on-no-transfer flag lets the task fail if no transfer was done. |
| Source Storage Type | Select the source storage type. |
| Target Storage Type | Select the target storage type. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to https://rclone.org/flags/. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |

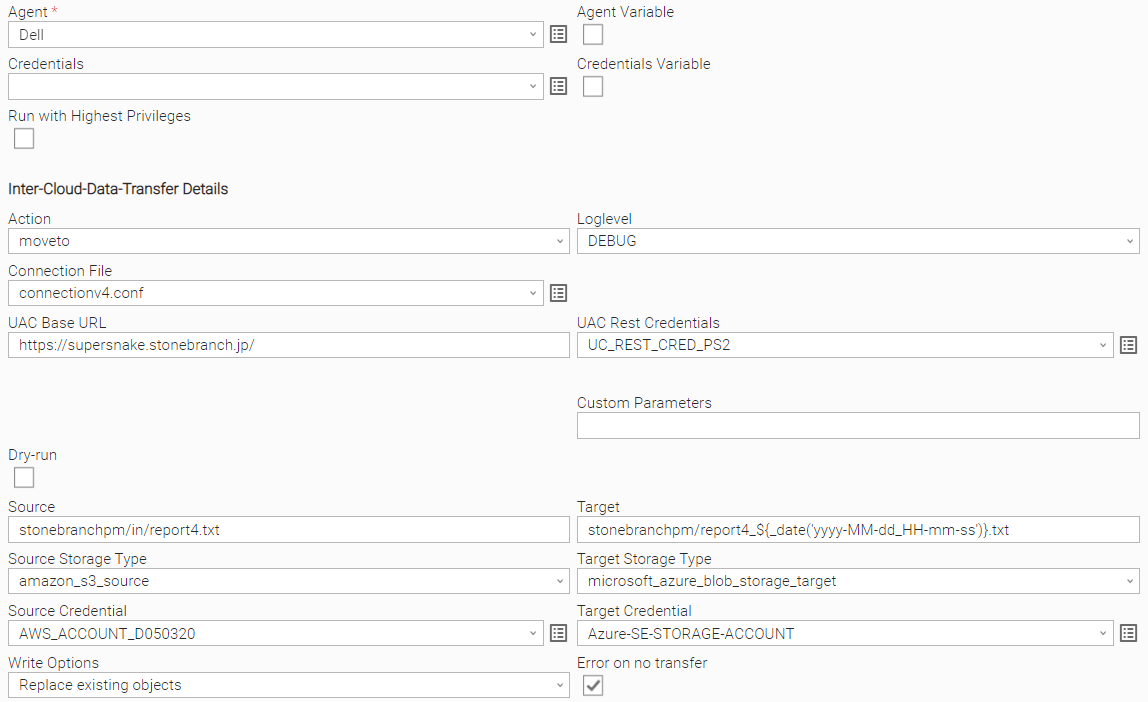

Example

The following example moves the file report4.txt from the Amazon S3 bucket stonebranchpm, folder in, to the Azure container stonebranchpm.

The file will be renamed at the target to stonebranchpm/report4_${_date('yyyy-MM-dd_HH-mm-ss')}.txt; for example, report4_2022-05-16_08-00-29.txt.

Action: sync

Before running a move, moveto, copy, copyto, or sync command, you can always try the command by setting the Dry-run option in the Task.

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Sync the source to the destination, changing the destination only. |
| Write Options | [ Do not overwrite existing objects, Replace existing objects, Create new with timestamp if exists, Use Custom Parameters ] Do not overwrite existing objects: Objects on the target with the same name will not be overwritten. Replace existing objects: Objects are always overwritten, even if the same object already exists on the target. Create new with timestamp if exists: If an object with the same name exists on the target, the object is duplicated with a timestamp added to the name. The file extension is kept; for example, if report1.txt exists on the target, a new file with a timestamp as postfix is created on the target, such as report1_20220513_093057.txt. Use Custom Parameters: Only the parameters in the field “Custom Parameters” are applied (other Parameters are ignored). |
| error-on-no-transfer | The error-on-no-transfer flag lets the task fail if no transfer was done. |
| Source Storage Type | Select the source storage type. |
| Target Storage Type | Select the target storage type. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| Filter Type | [ include, exclude, none ] Define the type of filter to apply. |
| Filter | Provide the patterns for file matching. For more examples of filter matching patterns, refer to https://rclone.org/filtering/. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of possible flags, refer to https://rclone.org/flags/. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |
| max-depth (recursion depth) | Limits the recursion depth. Attention: If you change max-depth to a value greater than 1, a recursive action is performed. |

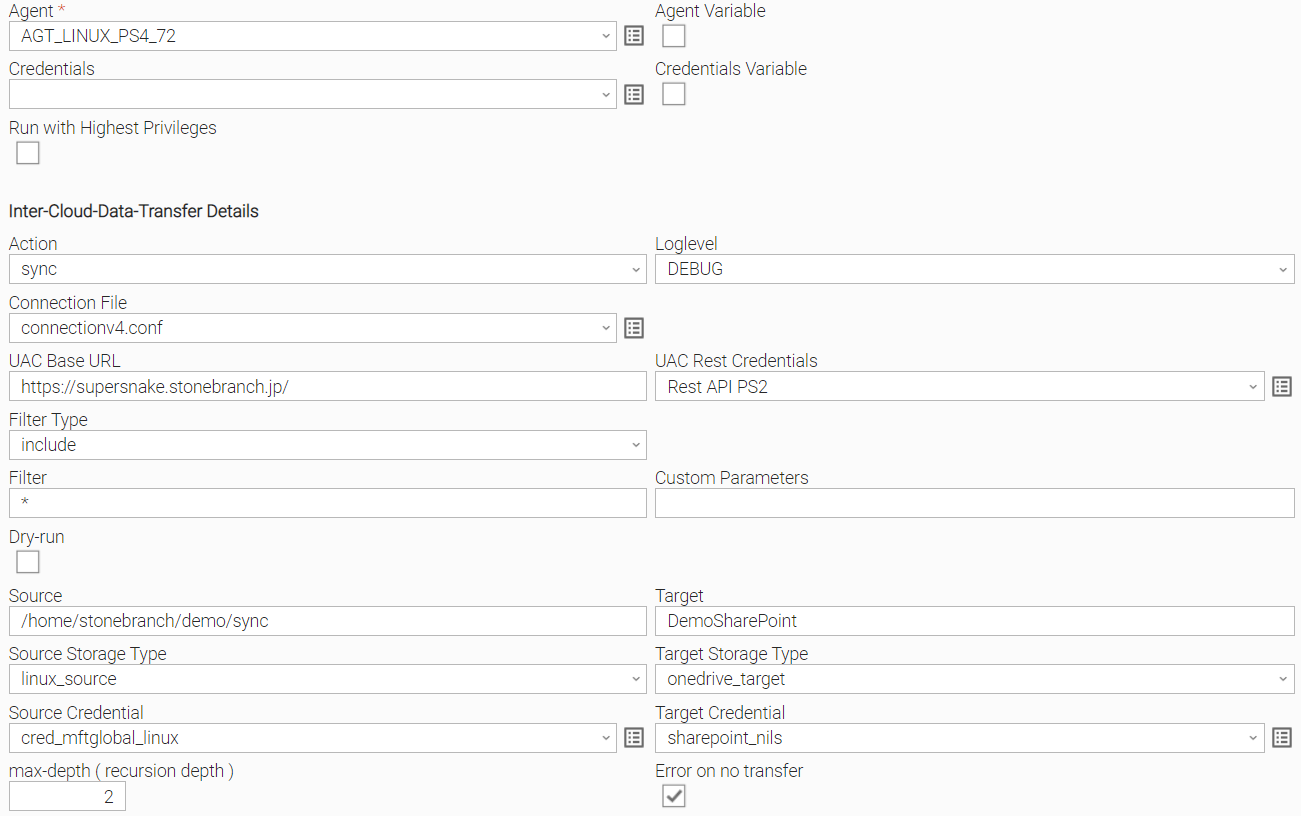

Example

The following example synchronizes all files and folders from the Linux source /home/stonebranch/demo/sync to the target SharePoint folder DemoSharePoint.

One level of subfolders and files in the directory /home/stonebranch/demo/sync is considered for the sync action, because max-depth is set to 2. max-depth 2 means that the current folder plus one subfolder level is synced from the source to the target.
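Because sync changes only the destination, it helps to reason about what a sync would copy and what it would delete before running it. The following sketch is a simplified model and not Rclone's algorithm: it compares two name-to-checksum mappings with made-up values, whereas Rclone decides based on size, modification time, or checksums depending on the storage system. The `plan_sync` helper is hypothetical.

```python
def plan_sync(source, destination):
    """Return which objects a one-way sync would copy to and delete from the destination."""
    # Copy everything that is new or whose checksum differs on the destination.
    to_copy = sorted(
        name for name, digest in source.items() if destination.get(name) != digest
    )
    # Delete everything on the destination that no longer exists on the source.
    to_delete = sorted(name for name in destination if name not in source)
    return {"copy": to_copy, "delete": to_delete}


source = {"a.txt": "111", "b.txt": "222", "sub/c.txt": "333"}
destination = {"a.txt": "111", "b.txt": "999", "old.txt": "444"}
print(plan_sync(source, destination))
```

The `delete` list is the reason a dry-run is recommended for sync in particular: objects that exist only on the target are removed, which a copy action would never do.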

Action: remove-object

| Field | Description |
|---|---|
| Agent | Linux or Windows Universal Agent to execute the Rclone command line. |
| Agent Cluster | Optional Agent Cluster for load balancing. |
| Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Remove objects in an OS directory or cloud object store. |
| Storage Type | Select the storage type. |
| File Path | Path to the directory in which you want to remove the objects. For example, File Path: stonebranchpmtest with Filter: report[1-3].txt removes all S3 objects matching the filter report[1-3].txt (report1.txt, report2.txt, and report3.txt) from the S3 bucket stonebranchpmtest. |
| Filter Type | [ include, exclude, none ] Define the type of filter to apply. |
| Filter | Provide the patterns for file matching. For more examples of filter matching patterns, refer to https://rclone.org/filtering/. |
| Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the connection file. |
| Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. Recommendation: Add the flag max-depth 1 to all copy, move, remove-object, and remove-object-store actions in the task field Other Parameters to avoid a recursive action. Attention: If the flag max-depth 1 is not set, a recursive action is performed. |
| Dry-run | [ checked, unchecked ] Do a trial run with no permanent changes. |
| UAC Rest Credentials | Universal Controller Rest API Credentials. |
| UAC Base URL | Universal Controller URL; for example, https://192.168.88.40/uc. |
| Loglevel | Universal Task logging settings [DEBUG, INFO, WARNING, ERROR, CRITICAL]. |
| max-depth (recursion depth) | Limits the recursion depth. Attention: If you change max-depth to a value greater than 1, a recursive action is performed. |

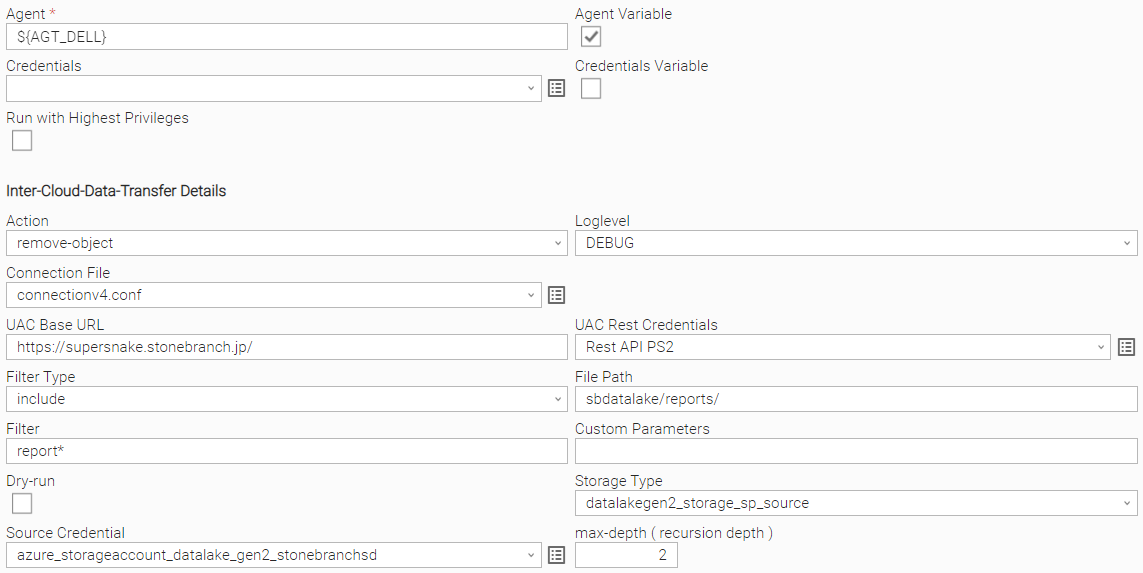

Example

The following example removes all objects starting with report from the folder reports in the Azure container sbdatalake.

If the folder reports contained a subfolder with an object starting with report, that object would also be deleted, because max-depth is set to 2. max-depth 2 means that the current folder plus one subfolder level is in scope for the remove-object action.

Action: remove-object-store

| Field | Description |

Agent | Linux or Windows Universal Agent to execute the Rclone command line. |

Agent Cluster | Optional Agent Cluster for load balancing. |

Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Remove an OS directory or cloud object store. |

Storage Type | Select the storage type:

|

| Source Credential | Credential used for the selected Storage Type. |

| Directory | Name of the directory you want to remove. The directory can be an object store or a file system OS directory. The directory needs to be empty before it can be removed. For example, Directory: stonebranchpmtest would remove the bucket stonebranchpmtest. |

Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the Connection File. |

Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. |

Dry-run | [ checked , unchecked ] Do a trial run with no permanent changes. |

UAC Rest Credentials | Universal Controller Rest API Credentials. |

UAC Base URL | Universal Controller URL. For example, https://192.168.88.40/uc |

Loglevel | Universal Task logging settings [DEBUG | INFO| WARNING | ERROR | CRITICAL]. |

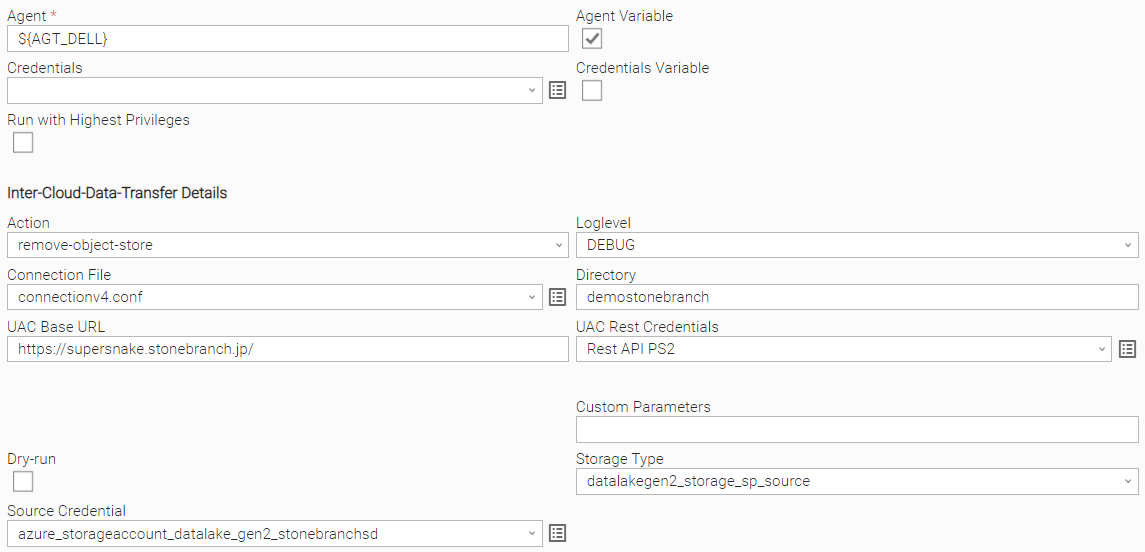

Example

The following example removes the Azure container demostonebranch.

The container must be empty in order to remove it.

Action: create-object-store

| Field | Description |

Agent | Linux or Windows Universal Agent to execute the Rclone command line. |

Agent Cluster | Optional Agent Cluster for load balancing. |

Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Create an OS directory or cloud object store. |

Storage Type | Select the storage type:

|

| Source Credential | Credential used for the selected Storage Type. |

Connection File | In the connection file, you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the Connection File. |

| Directory | Name of the directory you want to create. The directory can be an object store or a file system OS directory. For example, Directory: stonebranchpmtest would create the bucket stonebranchpmtest. |

Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. |

Dry-run | [ checked , unchecked ] Do a trial run with no permanent changes. |

UAC Rest Credentials | Universal Controller Rest API Credentials. |

UAC Base URL | Universal Controller URL. For example, https://192.168.88.40/uc |

Loglevel | Universal Task logging settings [DEBUG | INFO| WARNING | ERROR | CRITICAL]. |

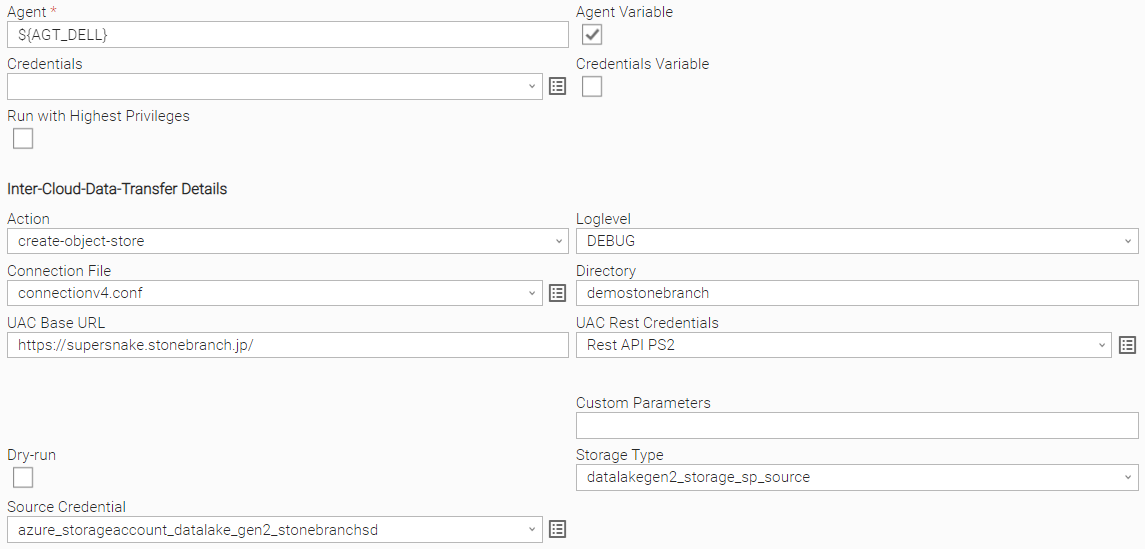

Example

The following example creates the Azure container demostonebranch.

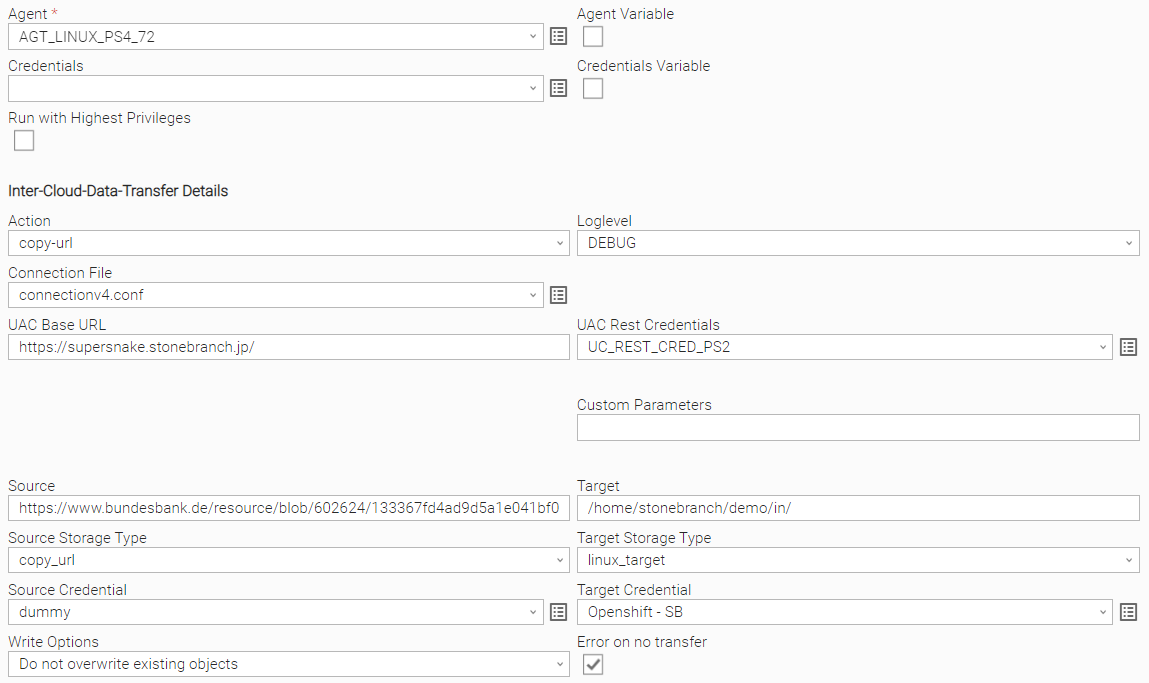

Action: copy-url

| Field | Description | |||||||

Agent | Linux or Windows Universal Agent to execute the Rclone command line. | |||||||

Agent Cluster | Optional Agent Cluster for load balancing. | |||||||

Action | [ list directory, copy, copyto, list objects, move, moveto, sync, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Download a URL's content and copy it to the destination without saving it in temporary storage. | |||||||

Source | URL parameter. Download a URL's content and copy it to the destination without saving it in temporary storage. | |||||||

| Storage Type | For the action copy_url, the value must be copy_url. | |||||||

Source Credential | Credential used for the selected Storage Type | |||||||

Target | Enter a target storage type name as defined in the Connection File. For example, amazon_s3, microsoft_azure_blob_storage, hdfs, onedrive, linux .. For a list of all possible storage types, refer to Overview of Cloud Storage Systems. | |||||||

Connection File | In the connection file you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the Connection File. | |||||||

Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. Useful parameters for the copy-url command:

| |||||||

Dry-run | [ checked , unchecked ] Do a trial run with no permanent changes. | |||||||

UAC Rest Credentials | Universal Controller Rest API Credentials | |||||||

UAC Base URL | Universal Controller URL For example, https://192.168.88.40/uc | |||||||

Loglevel | Universal Task logging settings [DEBUG | INFO| WARNING | ERROR | CRITICAL] |

Example

The following example downloads a PDF file:

...

The Linux folder is located on the server where the Agent ${AGT_LINUX} runs.

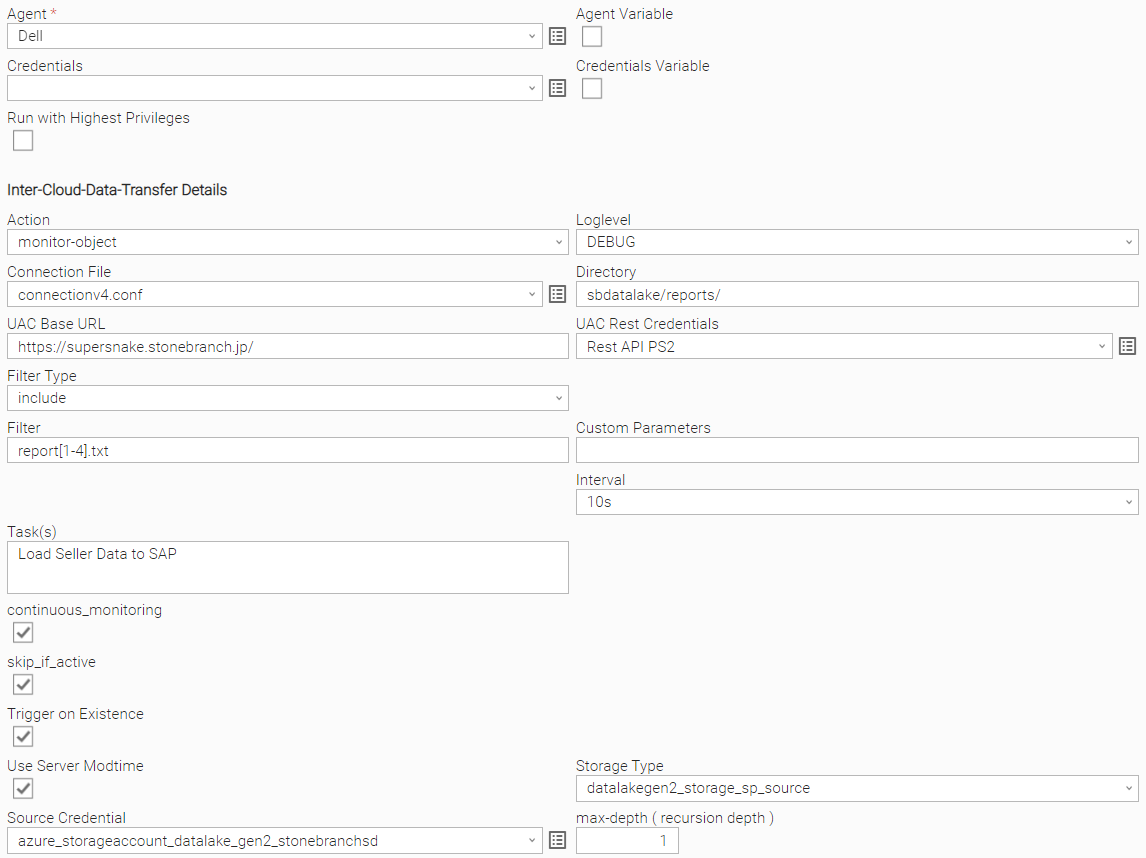

Action: monitor-object

| Field | Description |

Agent | Linux or Windows Universal Agent to execute the Rclone command line. |

Agent Cluster | Optional Agent Cluster for load balancing. |

Action | [ list directory, copy, list objects, move, remove-object, remove-object-store, create-object-store, copy-url, monitor-object ] Monitor a file or object in an OS directory or cloud object store. |

Storage Type | Select the storage type:

|

| Source Credential | Credential used for the selected Storage Type |

| Directory | Name of the directory to scan for the files to monitor. The directory can be an object store or a file system OS directory. For example: Directory: stonebranchpm/out This would monitor in the s3 bucket folder stonebranchpm/out for the object report1.txt. |

Connection File | In the connection file you configure all required Parameters and Credentials to connect to the Source and Target Cloud Storage System. For example, if you want to transfer a file from AWS S3 to Azure Blob Storage, you must configure the connection Parameters for AWS S3 and Azure Blob Storage. For details on how to configure the Connection File, refer to section Configure the Connection File. |

Filter Type | [ include, exclude, none ] Define the type of filter to apply. |

Filter | Provide the Patterns for matching file matching; for example, in a copy action: Filter Type: include Filter This means all reports with names matching For more examples on the filter matching pattern, refer to Rclone Filtering. |

Other Parameters | This field can be used to apply additional flag parameters to the selected action. For a list of all possible flags, refer to Global Flags. |

| continuous monitoring | [ checked , unchecked ] After the monitor has identified a matching file, it continues to monitor for new files. |

| skip_if_active | [ checked , unchecked ] If the monitor has launched one or more Tasks, it launches new Tasks only when the previously launched Tasks are no longer active. |

Trigger on Existence | [ checked , unchecked ] If checked, the monitor goes to success even if the file already exists when it was started. |

| Use Server Modtime | Use Server Modtime tells the Monitor to use the upload time to the AWS bucket instead of the last modification time of the file on the source (for example, Linux). |

| Tasks | Comma-separated list of Tasks, which will be launched when the monitoring criteria are met. If the field is empty, no Task(s) will be launched. In this case, the Task acts as a pure monitor. For example, Task1,Task2,Task3 |

| Interval | [ 10s, 60s, 180s ] Monitor interval at which the configured directory is checked for the file(s). For example, Interval: 60s means that every 60 seconds the task checks whether the file exists in the scan directory. |

UAC Rest Credentials | Universal Controller Rest API Credentials |

UAC Base URL | Universal Controller URL For example, https://192.168.88.40/uc |

Loglevel | Universal Task logging settings [DEBUG | INFO| WARNING | ERROR | CRITICAL] |

max-depth ( recursion depth ) | Limits the recursion depth

Attention: If you change max-depth to a value greater than 1, a recursive action is performed. |
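The interplay of Interval, Filter, and Trigger on Existence described in the table above can be pictured with a small polling loop. This is only a sketch of the idea, not the integration's actual code: the function `monitor`, the callable `list_objects` (a stand-in for listing the configured directory), and the `max_polls` parameter are hypothetical names introduced for illustration.

```python
import fnmatch
import time


def monitor(list_objects, pattern, interval_s, trigger_on_existence,
            sleep=time.sleep, max_polls=None):
    """Poll until an object matching `pattern` appears and return its name.

    list_objects: hypothetical callable returning the current object names.
    Returns None if max_polls is reached without a match.
    """
    # Objects already present at start only count when
    # Trigger on Existence is checked.
    baseline = set() if trigger_on_existence else set(list_objects())
    polls = 0
    while max_polls is None or polls < max_polls:
        for name in list_objects():
            if name not in baseline and fnmatch.fnmatchcase(name, pattern):
                return name
        polls += 1
        sleep(interval_s)  # wait one Interval before the next check
    return None
```

Passing `sleep` as a parameter keeps the sketch testable without real waiting; a real monitor would simply use the default.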

Example

The following example monitors the folder /reports/ in the Azure Datalake Gen2 storage container sbdatalake for all objects matching report[1-4].txt (report1.txt, report2.txt, report3.txt, report4.txt).

...

If the monitor has launched the Task “Load Seller Data to SAP”, it will only launch a new Task “Load Seller Data to SAP” if the previously launched Task is no longer active.
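To see which names a pattern such as report[1-4].txt selects under each Filter Type, the following sketch applies include, exclude, and none to a list of names. It uses Python's `fnmatch` module as an approximation; Rclone's own filter syntax is richer, so treat this as an illustration for simple patterns only. The function `apply_filter` is a hypothetical helper.

```python
import fnmatch


def apply_filter(names, filter_type, pattern):
    """Return the names kept by an include/exclude/none filter."""
    if filter_type == "none":
        return list(names)
    matched = {n for n in names if fnmatch.fnmatchcase(n, pattern)}
    if filter_type == "include":
        return [n for n in names if n in matched]
    if filter_type == "exclude":
        return [n for n in names if n not in matched]
    raise ValueError(f"unknown filter type: {filter_type}")


names = ["report1.txt", "report4.txt", "report5.txt", "index.html"]
print(apply_filter(names, "include", "report[1-4].txt"))
print(apply_filter(names, "exclude", "report[1-4].txt"))
```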

Inter-Cloud Data Transfer Example Cases

The following section provides examples for the Inter-Cloud Data Transfer Task actions:

Copy

Move

Remove-object

Monitor-object

| N# | Name |
|---|---|
| Copy | |
| C1 | Copy a file to target. The file does not exist at the target. |
| C2 | Copy two files. One of the files already exists at the target. |
| C3 | Copy a file to a target with Parameter ignore-existing set. A file with the same filename exists on the target. |
| C4 | Copy a file to a target, which already exists with Parameter error-on-no-transfer set. |
| C5 | Copy all files except one, from one folder into another folder. |
| C6 | Copy non-recursively a file from a folder into another folder using the parameter max-depth 1. |
| C7 | Copy files using multiple Parameters. |
| Move | |
| M1 | Move without deleting if no transfer took place. |
| M2 | Move a file with same source and target - config error case. |
| Remove-object | |
| R1 | Delete a file in a specific folder by using the parameter max-depth 1. |
| R2 | Delete all files matching a given name recursively in all folders starting from a given entry folder. |
| Monitor-object | |
| FM01 | Monitor a file in AWS - file does not exist on start of monitor. |
| FM02 | Monitor a file in AWS - file exists on start of monitor. |

Copy

The following file transfer examples will be described for the Copy action:

| N# | Name |
|---|---|
| C1 | Copy a file to target. The file does not exist at the target. |
| C2 | Copy two files. One of the files already exists at the target. |
| C3 | Copy a file to a target with Parameter ignore-existing set. A file with the same filename exists on the target. |
| C4 | Copy a file to a target, which already exists with Parameter error-on-no-transfer set. |
| C5 | Copy all files except one, from one folder into another folder. |
| C6 | Copy non-recursively a file from a folder into another folder using the parameter max-depth 1. |
| C7 | Copy files using multiple Parameters. |

| Note | ||

|---|---|---|

| ||

Before running a copy command, you can always try the command by setting the Dry-run option in the Task. |

Copy a File to Target: File does not Exist at the Target

Copy the file report1.txt to target stonebranchpm/in/. The file does not exist at the target.

Configuration

Source Storage Type: linux_source

Source: /home/stonebranch/demo/out/

Target Storage Type: amazon_s3_target

Target: stonebranchpm/in/

Filter Type: include

Filter: report1.txt

Other Parameters: none

Result

Task Status: success

Output:

report1.txt: Copied(new)

Copy Two Files: One File Already Exists at the Target

Copy two files report2.txt and report3.txt to the target stonebranchpm/in/. The file report2.txt (same size and content) already exists at the target.

Configuration

Source Storage Type: linux_source

Source: /home/stonebranch/demo/out/

Target Storage Type: amazon_s3_target

Target: stonebranchpm/in/

Filter Type: include

Filter: report[2-3].txt

Other Parameters: none

Result

Task Status: success

Output:

report3.txt: Copied (new)

report2.txt: Unchanged skipping

| Note | ||

|---|---|---|

| ||

If the file report2.txt had the same name but a different size, then the task would overwrite the file report2.txt on the target. |

Copy a File to a Target with Parameter ignore-existing Set. A File with the Same filename Exists on the Target.

Copy the file report2.txt to the target stonebranchpm/in/ with Parameter ignore-existing set. A file with the same filename exists at the target (size or content can be the same or different).

When ignore-existing is set, all files that exist on destination with the same filename are skipped. (No overwrite is done.)

Configuration

Source Storage Type: linux_source

Source: /home/stonebranch/demo/out/

Target Storage Type: amazon_s3_target

Target: stonebranchpm/in/

Filter Type: include

Filter: report2.txt

Other Parameters: --ignore-existing

Result

Task Status: failure

Output:

There was nothing to transfer.

Exiting due to ERROR(s).
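The copy cases described so far (new file, unchanged file, changed file, and ignore-existing) can be summarized as a small decision function. This is a simplified sketch based only on the behavior described in these examples: it compares file sizes, whereas Rclone can also take modification times and checksums into account. The function name and the returned labels are hypothetical and are not Rclone log messages.

```python
def copy_outcome(source_size, target_size, ignore_existing=False):
    """Classify what a copy does with one file.

    target_size is None when no file with that name exists at the target.
    """
    if target_size is None:
        return "copied (new)"
    if ignore_existing:
        # Any file that already exists at the target is skipped,
        # regardless of size or content.
        return "skipped (exists)"
    if source_size == target_size:
        return "unchanged, skipped"
    return "overwritten"


print(copy_outcome(15, None))                        # file missing at target
print(copy_outcome(15, 15))                          # same size at target
print(copy_outcome(15, 20))                          # different size at target
print(copy_outcome(15, 20, ignore_existing=True))    # ignore-existing set
```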

Copy a File to a Target, which Already Exists with Parameter Flag error-on-no-transfer Set.

Copy the file report2.txt, which already exists at the target, with Parameter error-on-no-transfer set.

When error-on-no-transfer is set, the task will fail in case no file is transferred.

Configuration

Source Storage Type: linux_source

Source: /home/stonebranch/demo/out/

Target Storage Type: amazon_s3_target

Target: stonebranchpm/in/

Filter Type: include

Filter: report2.txt

Other Parameters: --error-on-no-transfer

Result

...

Task Status: failure

Output:

report2.txt: Unchanged skipping

...

There was nothing to transfer

...

Exiting due to ERROR(s).

Copy all Files except One, from One Folder into Another Folder

Copy contents of folder in into folder out excluding the file index.html.

| Note | ||

|---|---|---|

| ||

If the folder out does not exist, it is created. |

Configuration

Source Storage Type: linux_source

Source: stonebranchpmtest/in

Target Storage Type: amazon_s3_target

Target: stonebranchpmtest/out

Filter Type: exclude

Filter: index.html

Other Parameters: none

| Code Block | ||

|---|---|---|

| ||

stonebranchpmtest

├── in

│ ├── report1.txt

│ └── report2.txt

│ ├── sub01

│ ├── report1.txt <- this file will be copied incl. directory sub01

│ └── index.html <- will not be copied, because of the exclude filter

├── out

│ └── report10.txt

After execution of the copy action:

├── out

│ ├── report1.txt

│ └── report2.txt

│ ├── sub01

│ ├── report1.txt <- this file will be copied incl. directory sub01

│ └── report10.txt |

Result

Task Status: success

Output:

report1.txt: Copied (new)

report2.txt: Copied (new)

sub1/report1.txt: Copied (new)

Copy Non-Recursively a File from a Folder into Another Folder by Using the Parameter max-depth 1.

Copy the file report1.txt from the folder stonebranchpmtest/in into folder stonebranchpmtest2/. The Parameter max-depth 1 is used to copy non-recursively.

max-depth limits the recursion depth (default -1).

max-depth 1 means only the current directory is in scope.

| Note | ||

|---|---|---|

| ||

The folder in will not be created on the target; only the file is copied to the provided target directory. |

Configuration

Source Storage Type: amazon_s3_source

Source: stonebranchpmtest/in

Target Storage Type: amazon_s3_target

Target: stonebranchpmtest2/out

Filter Type: include

Filter: report1.txt

Other Parameters: --max-depth 1

| Code Block | ||

|---|---|---|

| ||

stonebranchpmtest

├── in

│ ├── report1.txt <- this file will be copied

│ ├── report2.txt

│ ├── sub01

│ ├── report1.txt <- this file will not be copied, because of --max-depth 1

├── report1.txt <- this file will not be copied

stonebranchpmtest2

├── report10.txt

After execution of the copy action:

stonebranchpmtest2

├── report1.txt

├── report10.txt |

Result

Task Status: success

Output:

report1.txt: Copied (new)

Copy Files Using Multiple Parameters

It is possible to provide multiple Parameters in the Other Parameters field of the Task.

Example:

Parameters: --max-depth 1 --error-on-no-transfer

In this case, both Parameters are applied.

Configuration

Source Storage Type: amazon_s3_source

Source: stonebranchpmtest/in

Target Storage Type: amazon_s3_target

Target: stonebranchpmtest2/out

Filter Type: include

Filter: report1.txt

Other Parameters: --max-depth 1, --error-on-no-transfer

Result

The Parameters: --max-depth 1 and --error-on-no-transfer are both applied.
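As an illustration of how the task fields could translate into an Rclone command line, the sketch below splits the Other Parameters field into individual flags and appends them to a copy command. How the integration really assembles its command is not documented here, so the helper names `parse_other_parameters` and `build_copy_command` are hypothetical. The `remote:path` notation and the flags `--include`, `--exclude`, `--max-depth`, `--error-on-no-transfer`, and `--dry-run` are standard Rclone; using the Storage Type as the remote name is an assumption.

```python
import shlex


def parse_other_parameters(text):
    """Split 'Other Parameters' into a flat argument list.

    Accepts flags separated by commas and/or spaces; 'none' or an
    empty field yields no extra arguments.
    """
    if text.strip().lower() in ("", "none"):
        return []
    args = []
    for chunk in text.split(","):
        args.extend(shlex.split(chunk))
    return args


def build_copy_command(source_type, source, target_type, target,
                       filter_type="none", filter_pattern="",
                       other_parameters="none", dry_run=False):
    """Assemble a hypothetical rclone copy command from task fields."""
    cmd = ["rclone", "copy",
           f"{source_type}:{source}", f"{target_type}:{target}"]
    if filter_type in ("include", "exclude"):
        cmd += [f"--{filter_type}", filter_pattern]
    cmd += parse_other_parameters(other_parameters)
    if dry_run:
        cmd.append("--dry-run")  # trial run with no permanent changes
    return cmd


print(build_copy_command(
    "amazon_s3_source", "stonebranchpmtest/in",
    "amazon_s3_target", "stonebranchpmtest2/out",
    "include", "report1.txt",
    "--max-depth 1, --error-on-no-transfer"))
```

Returning the command as a list rather than a single string avoids shell quoting problems if it is later passed to `subprocess.run`.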

Move

The following file transfer examples will be described for the Move action:

| N# | Name |
|---|---|
| M1 | Move without deleting if no transfer took place. |
| M2 | Move a file with same source and target - config error case. |

| Note | ||

|---|---|---|

| ||

Before running a Move command, you can always try the command by setting the Dry-run option in the Task. |

Move Without Deleting if no Transfer Took Place

If you want the source file to be deleted only when a file transfer took place, add the flag --ignore-existing in the field Other Parameters.

Example: You move a file to a destination where the file already exists. In this case, rclone does not perform the copy but still deletes the source file.

If you set the flag --ignore-existing, the delete operation is not performed.
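The effect of --ignore-existing on a move can be modeled with two dictionaries standing in for the source and target locations. This is a toy model of the behavior described above, not Rclone's implementation; the function `move_file` and its return strings are hypothetical.

```python
def move_file(name, source, target, ignore_existing=False):
    """Model a move of one file between two name -> content dicts."""
    if name in target:
        if ignore_existing:
            # Existing target files are skipped and the source is kept.
            return "nothing transferred, source kept"
        if target[name] == source[name]:
            # Identical file at the target: no copy, but the source is removed.
            del source[name]
            return "nothing transferred, source deleted"
    # New or changed file: transfer it and remove it from the source.
    target[name] = source.pop(name)
    return "moved"


src = {"report4.txt": "data"}
dst = {"report4.txt": "data"}
print(move_file("report4.txt", src, dst, ignore_existing=True))
print(sorted(src))  # the source still holds report4.txt
```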


Configuration

Source Storage Type: linux_source

Source: /home/stonebranch/demo/out/

Target Storage Type: amazon_s3_target

Target: stonebranchpm/in/

Filter Type: include

Filter: report4.txt

Other Parameters: --ignore-existing, --max-depth 1, --error-on-no-transfer

Result

Task Status: failed

The file report4.txt is not deleted at the source “/home/stonebranch/demo/out/”.

Output:

There was nothing to transfer - Return Code: 9

Exiting due to ERROR(s).

Move a File with Same Source and Target - config error Case

The source has accidentally been configured the same as the target.

The --ignore-existing parameter prevents a file from being deleted if the source is equal to the destination in a copy operation.

The max-depth 1 parameter prevents a recursive move of files.

Configuration

Source Storage Type: linux_source

Source: /home/stonebranch/demo/out/

Target Storage Type: linux_source

Target: /home/stonebranch/demo/out/

Filter Type: include

Filter: report4.txt

Other Parameters: --ignore-existing, --max-depth 1

Result

Task Status: success (because --error-on-no-transfer was not set)

The file report4.txt is not deleted.

Output:

There was nothing to transfer - Return Code: 0
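The two Move examples differ only in whether --error-on-no-transfer is set: Rclone exits with code 9 when the operation succeeded but no files were transferred and that flag is present, and with code 0 otherwise. The sketch below models just this relationship and assumes that the task fails on any non-zero return code; the function names are hypothetical.

```python
RCLONE_OK = 0
RCLONE_NO_FILES_TRANSFERRED = 9  # only returned with --error-on-no-transfer


def expected_return_code(files_transferred, error_on_no_transfer):
    """Return code of an otherwise error-free transfer."""
    if files_transferred == 0 and error_on_no_transfer:
        return RCLONE_NO_FILES_TRANSFERRED
    return RCLONE_OK


def task_status(return_code):
    """Assumption: any non-zero return code fails the task."""
    return "success" if return_code == RCLONE_OK else "failure"


# Nothing transferred, with and without --error-on-no-transfer
print(task_status(expected_return_code(0, True)))
print(task_status(expected_return_code(0, False)))
```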

Remove-object

The following file examples will be described for the Remove-object action:

| N# | Name |
|---|---|
| R1 | Delete a file in a specific folder by using the parameter max-depth 1. |
| R2 | Delete all files matching a given name recursively in all folders starting from a given entry folder. |

| Note | ||

|---|---|---|

| ||

Before running a remove-object command, you can always try the command by setting the Dry-run option in the Task. |

Delete a File in a Specific Folder by Using the Parameter max-depth 1

Delete only the report1.txt in the folder stonebranchpmtest/in/

To delete a specific file in a folder, provide the File Path to the file to delete and provide the Parameter: --max-depth 1 in the Other Parameters field.

max-depth limits the recursion depth (default -1).

max-depth 1 means only the current directory is in scope.

Configuration

File Path: stonebranchpmtest/in/

Storage Type: amazon_s3_source

Filter Type: include

Filter: report1.txt

Other Parameters: --max-depth 1

| Code Block | ||

|---|---|---|

| ||

stonebranchpmtest

├── in

│ ├── report1.txt <- this file will be deleted

│ ├── report2.txt

│ ├── sub01

│ ├── report1.txt <- this file will not be deleted because of --max-depth 1

├── report1.txt

After execution of the delete action:

stonebranchpmtest

├── in

│ ├── report2.txt

│ ├── sub01

│ ├── report1.txt

├── report1.txt

|

Result

Task Status: success

Output:

report1.txt Deleted

Delete all Files Matching a Given Name Recursively in all Folders Starting from a Given Entry Folder

Delete report1.txt recursively in all folders, starting from the given entry folder stonebranchpmtest/.

Configuration

File Path: stonebranchpmtest/

Storage Type: amazon_s3_source

Filter Type: include

Filter: report1.txt

Other Parameters: none

| Code Block | ||

|---|---|---|

| ||

stonebranchpmtest

├── in

│ ├── report1.txt <- this file will be deleted

│ ├── report2.txt

│ ├── sub01

│ ├── report1.txt <- this file will be deleted

├── report1.txt <- this file will be deleted

After execution of the delete action:

stonebranchpmtest

├── in

│ ├── report2.txt

│ ├── sub01

│ ├──

├── |

Result

Task Status: success

Output:

in/report1.txt Deleted

in/sub1/report1.txt Deleted

report1.txt Deleted
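The difference between the two Remove-object examples comes down to how many directory levels below the entry folder are in scope. The following sketch reproduces that selection for the sample bucket layout, with paths given relative to the bucket stonebranchpmtest. It is a simplified model of the max-depth rule, not Rclone's implementation, and `select_for_delete` is a hypothetical helper.

```python
import posixpath

FILES = [
    "in/report1.txt",
    "in/report2.txt",
    "in/sub01/report1.txt",
    "report1.txt",
]


def select_for_delete(files, entry, name, max_depth=-1):
    """Return the paths under `entry` named `name` within max_depth levels.

    max_depth -1 means unlimited recursion; 1 means the entry folder only.
    """
    entry = entry.strip("/")
    selected = []
    for path in files:
        if entry:
            if not path.startswith(entry + "/"):
                continue
            relative = path[len(entry) + 1:]
        else:
            relative = path
        depth = relative.count("/") + 1  # 1 = directly in the entry folder
        in_scope = max_depth < 0 or depth <= max_depth
        if in_scope and posixpath.basename(relative) == name:
            selected.append(path)
    return selected


print(select_for_delete(FILES, "in/", "report1.txt", max_depth=1))  # case R1
print(select_for_delete(FILES, "", "report1.txt"))                  # case R2
```

With max-depth 2 and the entry folder in/, the file in/sub01/report1.txt would also be selected, because one subfolder level is then in scope.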

Monitor-object

The following examples describe how file monitoring can be performed.

| N# | Name |
|---|---|
| FM01 | Monitor a file in AWS - file does not exist on start of monitor. |
| FM02 | Monitor a file in AWS - file exists on start of monitor. |

| Note | ||

|---|---|---|

| ||

Before running a monitor-object command, you can always try the command by setting the Dry-run option in the Task. |

Monitor a File in AWS - File does not Exist on Start of Monitor

With the Parameter Flag --use-server-modtime, you can tell the Monitor to use the upload time to the AWS bucket instead of the last modification time of the file on the source (for example, Linux).

max-depth 1 means only the current directory is in scope.

Configuration

Directory: stonebranchpmtest/in/

Storage Type: amazon_s3_source

Filter Type: include

Filter: test3.txt

Interval: 10s

Trigger on Existence: no

Other Parameters: --max-depth 1, --use-server-modtime

Result

Task Status: running

The monitor will stay in running until the file test3.txt is copied to stonebranchpmtest/in

Output:

INFO - REST SB library version 1.0

INFO - ############ Monitor Objects Action ###############

INFO - ############ List Objects Action ###############

INFO - sleeping for 10 seconds now

Monitor a File in AWS - File Exists on Start of Monitor

With the Parameter Flag --use-server-modtime, you can tell the Monitor to use the upload time to the AWS bucket instead of the last modification time of the file on the source (for example, Linux).

max-depth 1 means only the current directory is in scope.

Configuration

Source Storage Type: linux_source

Source: /home/stonebranch/demo/out/

Target Storage Type: amazon_s3_target

Target: stonebranchpm/in/

Filter Type: include

Filter: report10.txt

Trigger on Existence: yes

Other Parameters: --ignore-existing, --max-depth 1, --error-on-no-transfer

Result

...

Task Status: success

...

The task goes to success because the file report10.txt already exists in stonebranchpmtest/in ( trigger on existence is set to true ).

Output:

...

###### object in stonebranchpmtest/in/ ######

...

[{"Path":"report10.txt","Name":"report10.txt","Size":15,"MimeType":"text/plain","ModTime":"2021-12-08T16:26:18.000000000Z","IsDir":false,"Tier":"STANDARD"}]

...
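The object listing in the output above is a JSON array, in the same shape that Rclone's lsjson command produces. If you want to post-process such output, for example to check the modification time of a monitored object, a sketch like the following could be used. The helper `parse_listing` is hypothetical, and the timestamp is truncated to whole seconds because the nine-digit fraction is not accepted by `datetime.strptime`.

```python
import json
from datetime import datetime, timezone

LISTING = (
    '[{"Path":"report10.txt","Name":"report10.txt","Size":15,'
    '"MimeType":"text/plain","ModTime":"2021-12-08T16:26:18.000000000Z",'
    '"IsDir":false,"Tier":"STANDARD"}]'
)


def parse_listing(text):
    """Return (name, size, modification time in UTC) for each file entry."""
    objects = []
    for entry in json.loads(text):
        if entry["IsDir"]:
            continue  # directories are not monitored objects
        # Keep "YYYY-MM-DDTHH:MM:SS" and drop the nanoseconds and the "Z".
        mod_time = datetime.strptime(
            entry["ModTime"][:19], "%Y-%m-%dT%H:%M:%S"
        ).replace(tzinfo=timezone.utc)
        objects.append((entry["Name"], entry["Size"], mod_time))
    return objects


for name, size, mod_time in parse_listing(LISTING):
    print(name, size, mod_time.isoformat())
```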